Introduction

Miden VM is a zero-knowledge virtual machine written in Rust. For any program executed on Miden VM, a STARK-based proof of execution is automatically generated. This proof can then be used by anyone to verify that the program was executed correctly without the need for re-executing the program or even knowing the contents of the program.

Status and features

Miden VM is currently on release v0.14. In this release, most of the core features of the VM have been stabilized, and most of the STARK proof generation has been implemented. While we expect to keep making changes to the VM internals, the external interfaces should remain relatively stable, and we will do our best to minimize the amount of breaking changes going forward.

At this point, Miden VM is good enough for experimentation, and even for real-world applications, but it is not yet ready for production use. The codebase has not been audited and contains known and unknown bugs and security flaws.

Feature highlights

Miden VM is a fully-featured virtual machine. Despite being optimized for zero-knowledge proof generation, it provides all the features one would expect from a regular VM. To highlight a few:

- Flow control. Miden VM is Turing-complete and supports familiar flow control structures such as conditional statements and counter/condition-controlled loops. There are no restrictions on the maximum number of loop iterations or the depth of control flow logic.

- Procedures. Miden assembly programs can be broken into subroutines called procedures. This improves code modularity and helps reduce the size of Miden VM programs.

- Execution contexts. Miden VM program execution can span multiple isolated contexts, each with its own dedicated memory space. The contexts are separated into the root context and user contexts. The root context can be accessed from user contexts via customizable kernel calls.

- Memory. Miden VM supports read-write random-access memory. Procedures can reserve portions of global memory for easier management of local variables.

- u32 operations. Miden VM supports native operations with 32-bit unsigned integers. This includes basic arithmetic, comparison, and bitwise operations.

- Cryptographic operations. Miden assembly provides built-in instructions for computing hashes and verifying Merkle paths. These instructions use Rescue Prime Optimized hash function (which is the native hash function of the VM).

- External libraries. Miden VM supports compiling programs against pre-defined libraries. The VM ships with one such library: Miden

stdlibwhich adds support for such things as 64-bit unsigned integers. Developers can build other similar libraries to extend the VM's functionality in ways which fit their use cases. - Nondeterminism. Unlike traditional virtual machines, Miden VM supports nondeterministic programming. This means a prover may do additional work outside of the VM and then provide execution hints to the VM. These hints can be used to dramatically speed up certain types of computations, as well as to supply secret inputs to the VM.

- Customizable hosts. Miden VM can be instantiated with user-defined hosts. These hosts are used to supply external data to the VM during execution/proof generation (via nondeterministic inputs) and can connect the VM to arbitrary data sources (e.g., a database or RPC calls).

Planned features

In the coming months we plan to finalize the design of the VM and implement support for the following features:

- Recursive proofs. Miden VM will soon be able to verify a proof of its own execution. This will enable infinitely recursive proofs, an extremely useful tool for real-world applications.

- Better debugging. Miden VM will provide a better debugging experience including the ability to place breakpoints, better source mapping, and more complete program analysis info.

- Faulty execution. Miden VM will support generating proofs for programs with faulty execution (a notoriously complex task in ZK context). That is, it will be possible to prove that execution of some program resulted in an error.

Structure of this document

This document is meant to provide an in-depth description of Miden VM. It is organized as follows:

- In the introduction, we provide a high-level overview of Miden VM and describe how to run simple programs.

- In the user documentation section, we provide developer-focused documentation useful to those who want to develop on Miden VM or build compilers from higher-level languages to Miden assembly (the native language of Miden VM).

- In the design section, we provide in-depth descriptions of the VM's internals, including all AIR constraints for the proving system. We also provide the rationale for settling on specific design choices.

- Finally, in the background material section, we provide references to materials which could be useful for learning more about STARKs - the proving system behind Miden VM.

License

Licensed under the MIT license.

Miden VM overview

Miden VM is a stack machine. The base data type of the MV is a field element in a 64-bit prime field defined by modulus . This means that all values that the VM operates with are field elements in this field (i.e., values between and , both inclusive).

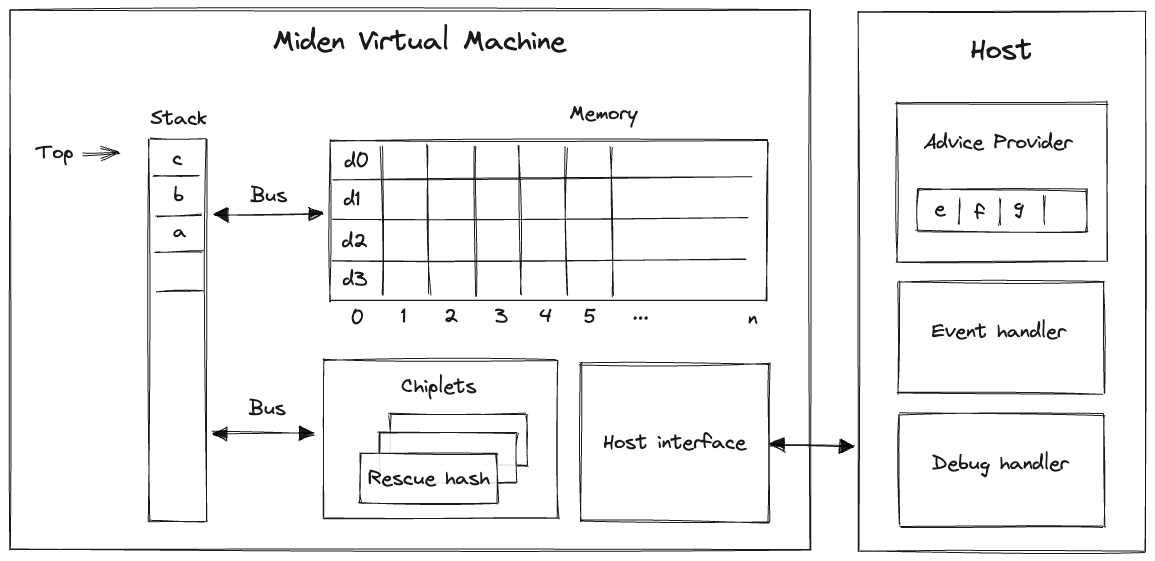

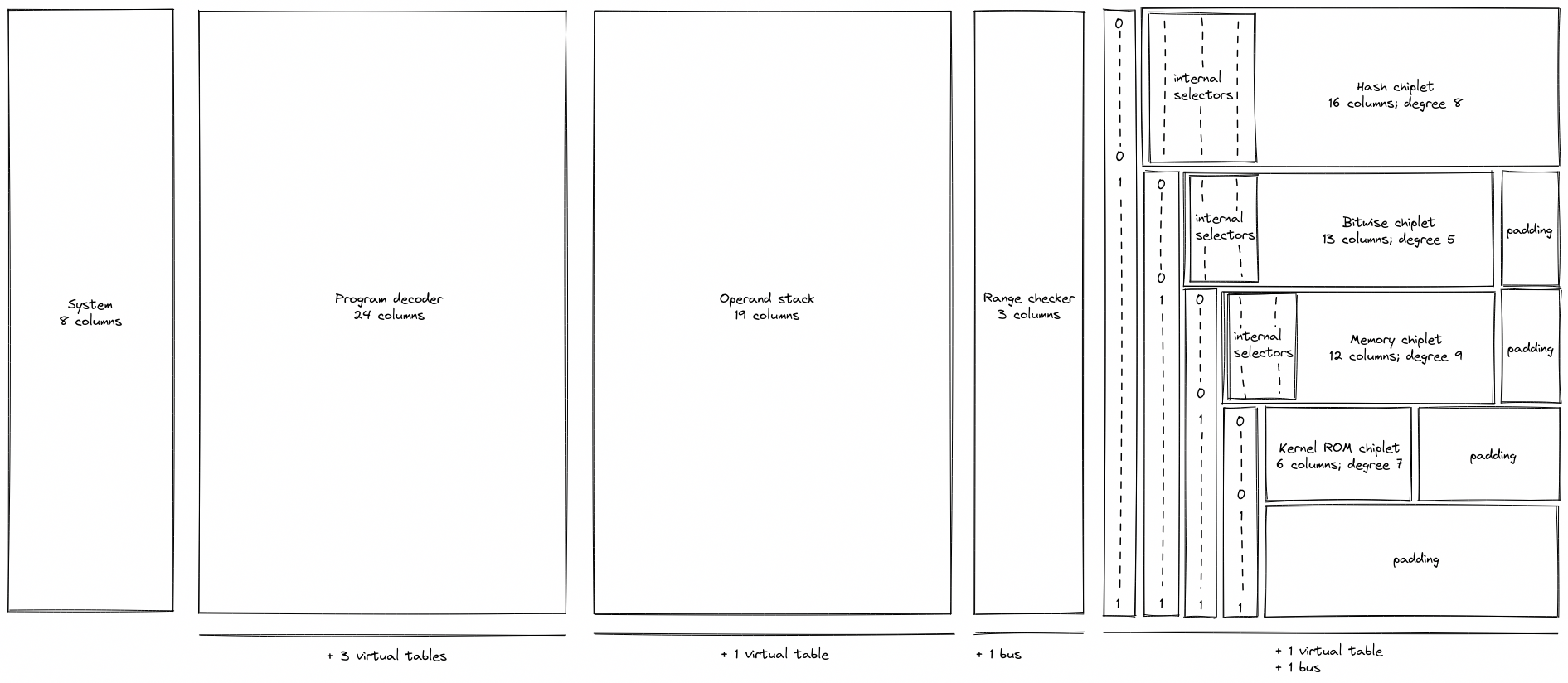

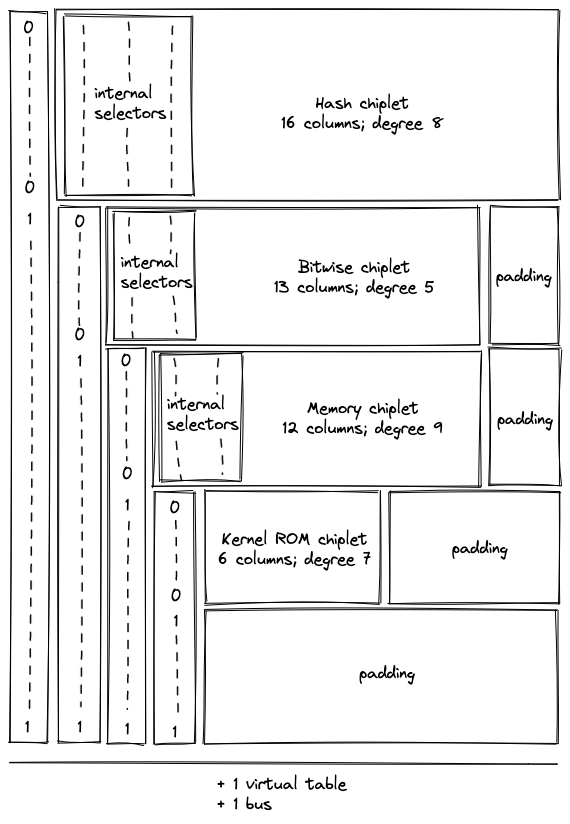

Miden VM consists of four high-level components as illustrated below.

These components are:

- Stack which is a push-down stack where each item is a field element. Most assembly instructions operate with values located on the stack. The stack can grow up to items deep, however, only the top 16 items are directly accessible.

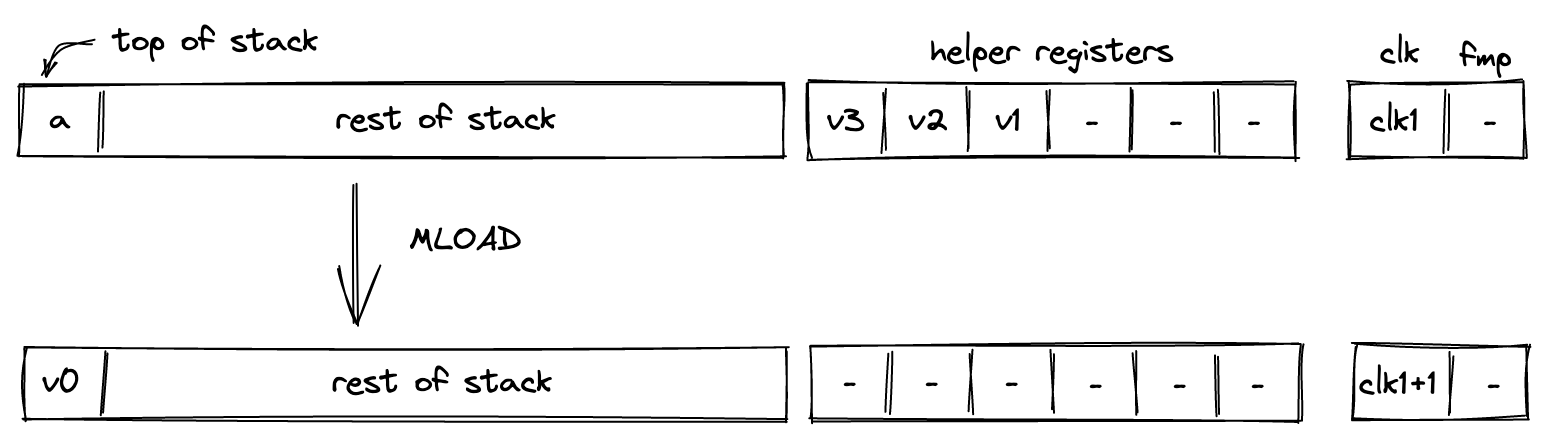

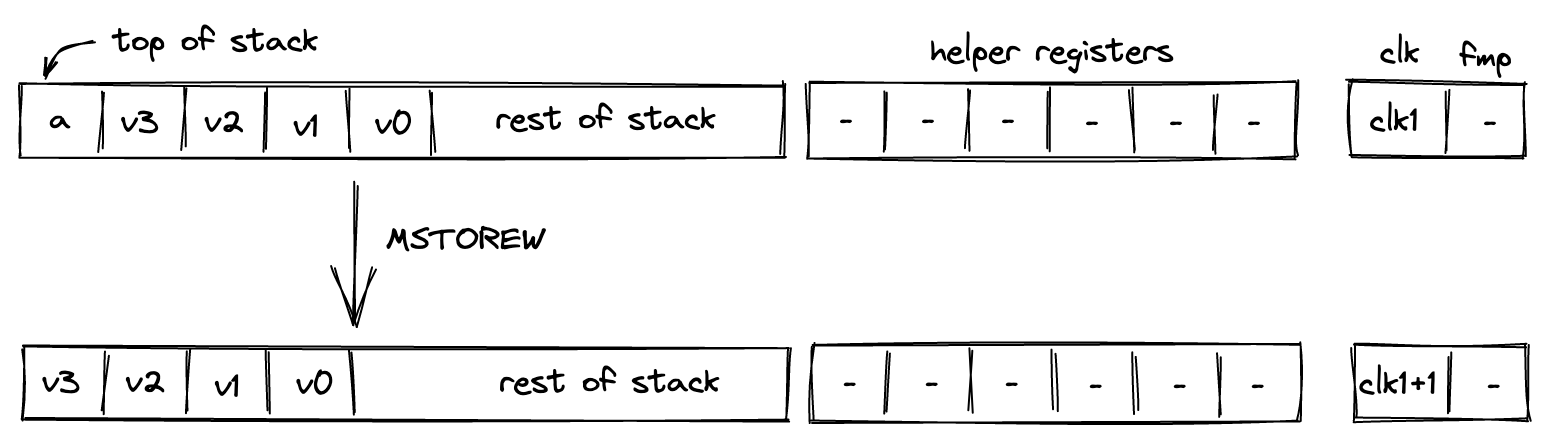

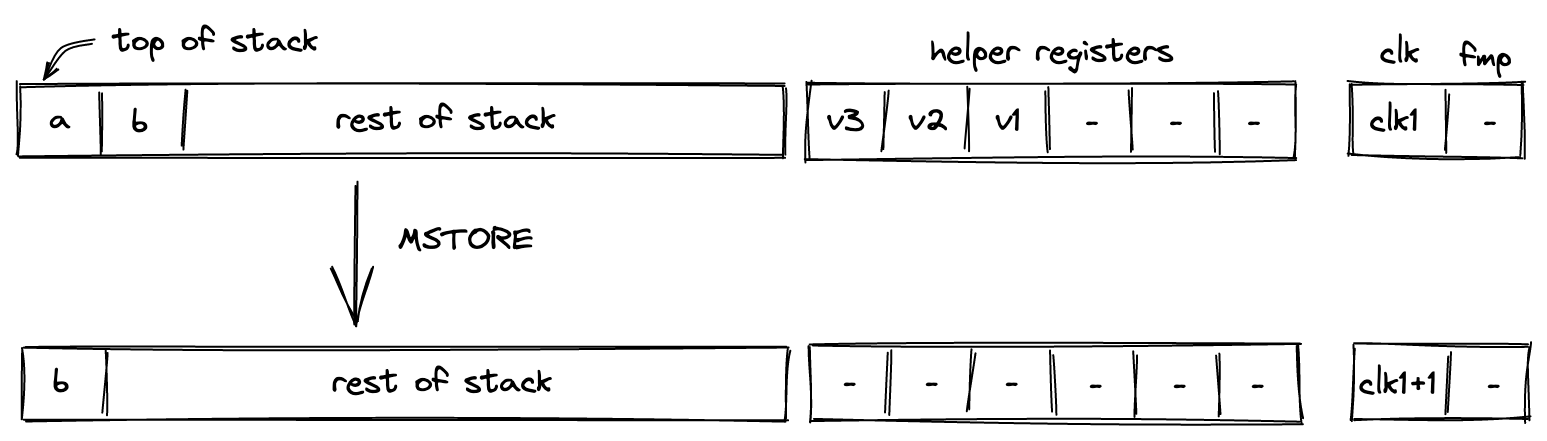

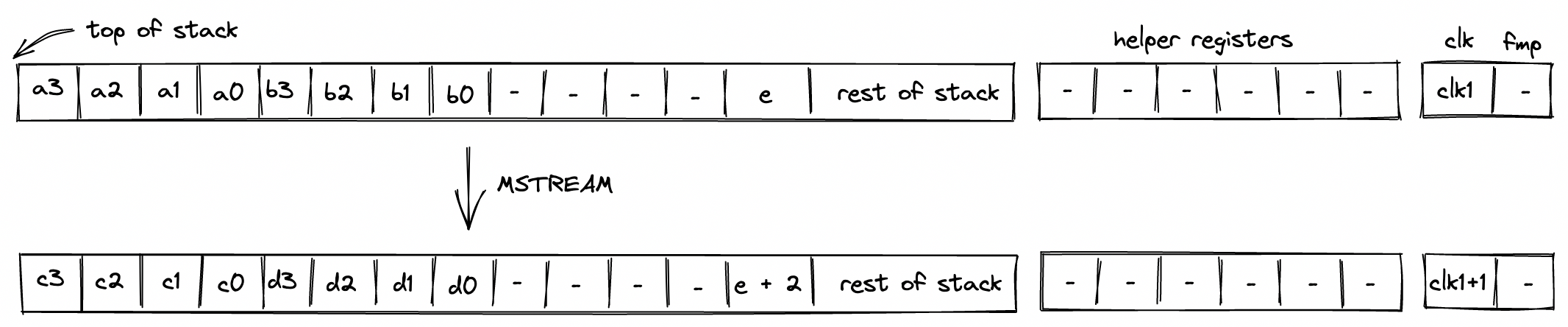

- Memory which is a linear random-access read-write memory. The memory is element-addressable, meaning, a single element is located at each address. However, there are instructions to read and write elements to/from memory both individually or in batches of four, since the latter is quite common. Memory addresses can be in the range .

- Chiplets which are specialized circuits for accelerating certain types of computations. These include Rescue Prime Optimized (RPO) hash function, 32-bit binary operations, and 16-bit range checks.

- Host which is a way for the prover to communicate with the VM during runtime. This includes responding to the VM's requests for non-deterministic inputs and handling messages sent by the VM (e.g., for debugging purposes). The requests for non-deterministic inputs are handled by the host's advice provider.

Miden VM comes with a default implementation of the host interface (with an in-memory advice provider). However, the users are able to provide their own implementations which can connect the VM to arbitrary data sources (e.g., a database or RPC calls) and define custom logic for handling events emitted by the VM.

Writing programs

Our goal is to make Miden VM an easy compilation target for high-level languages such as Rust, Move, Sway, and others. We believe it is important to let people write programs in the languages of their choice. However, compilers to help with this have not been developed yet. Thus, for now, the primary way to write programs for Miden VM is to use Miden assembly.

While writing programs in assembly is far from ideal, Miden assembly does make this task a little bit easier by supporting high-level flow control structures and named procedures.

Inputs and outputs

External inputs can be provided to Miden VM in two ways:

- Public inputs can be supplied to the VM by initializing the stack with desired values before a program starts executing. At most 16 values can be initialized in this way, so providing more than 16 values will cause an error.

- Secret (or nondeterministic) inputs can be supplied to the VM via the advice provider. There is no limit on how much data the advice provider can hold.

After a program finishes executing, the elements remaining on the stack become the outputs of the program. Notice that having more than 16 values on the stack at the end of execution will cause an error, so the values beyond the top 16 elements of the stack should be dropped. We've provided the truncate_stack utility procedure in the standard library for this purpose.

The number of public inputs and outputs of a program can be reduced by making use of the advice stack and Merkle trees. Just 4 elements are sufficient to represent a root of a Merkle tree, which can be expanded into an arbitrary number of values.

For example, if we wanted to provide a thousand public input values to the VM, we could put these values into a Merkle tree, initialize the stack with the root of this tree, initialize the advice provider with the tree itself, and then retrieve values from the tree during program execution using mtree_get instruction (described here).

Stack depth restrictions

For reasons explained here, the VM imposes the restriction that the stack depth cannot be smaller than . This has the following effects:

- When initializing a program with fewer than inputs, the VM will pad the stack with zeros to ensure the depth is at the beginning of execution.

- If an operation would result in the stack depth dropping below , the VM will insert a zero at the deep end of the stack to make sure the depth stays at .

Nondeterministic inputs

The advice provider component is responsible for supplying nondeterministic inputs to the VM. These inputs only need to be known to the prover (i.e., they do not need to be shared with the verifier).

The advice provider consists of three components:

- Advice stack which is a one-dimensional array of field elements. Being a stack, the VM can either push new elements onto the advice stack, or pop the elements from its top.

- Advice map which is a key-value map where keys are words and values are vectors of field elements. The VM can copy values from the advice map onto the advice stack as well as insert new values into the advice map (e.g., from a region of memory).

- Merkle store which contain structured data reducible to Merkle paths. Some examples of such structures are: Merkle tree, Sparse Merkle Tree, and a collection of Merkle paths. The VM can request Merkle paths from the Merkle store, as well as mutate it by updating or merging nodes contained in the store.

The prover initializes the advice provider prior to executing a program, and from that point on the advice provider is manipulated solely by executing operations on the VM.

Usage

Before you can use Miden VM, you'll need to make sure you have Rust installed. Miden VM v0.14 requires Rust version 1.85 or later.

Miden VM consists of several crates, each of which exposes a small set of functionality. The most notable of these crates are:

- miden-processor, which can be used to execute Miden VM programs.

- miden-prover, which can be used to execute Miden VM programs and generate proofs of their execution.

- miden-verifier, which can be used to verify proofs of program execution generated by Miden VM prover.

The above functionality is also exposed via the single miden-vm crate, which also provides a CLI interface for interacting with Miden VM.

CLI interface

Compiling Miden VM

To compile Miden VM into a binary, we have a Makefile with the following tasks:

make exec

This will place an optimized, multi-threaded miden executable into the ./target/optimized directory. It is equivalent to executing:

cargo build --profile optimized --features concurrent,executable

If you would like to enable single-threaded mode, you can compile Miden VM using the following command:

make exec-single

Controlling parallelism

Internally, Miden VM uses rayon for parallel computations. To control the number of threads used to generate a STARK proof, you can use RAYON_NUM_THREADS environment variable.

GPU acceleration

Miden VM proof generation can be accelerated via GPUs. Currently, GPU acceleration is enabled only on Apple Silicon hardware (via Metal). To compile Miden VM with Metal acceleration enabled, you can run the following command:

make exec-metal

Similar to make exec command, this will place the resulting miden executable into the ./target/optimized directory.

Currently, GPU acceleration is applicable only to recursive proofs which can be generated using the -r flag.

SIMD acceleration

Miden VM execution and proof generation can be accelerated via vectorized instructions. Currently, SIMD acceleration can be enabled on platforms supporting SVE and AVX2 instructions.

To compile Miden VM with AVX2 acceleration enabled, you can run the following command:

make exec-avx2

To compile Miden VM with SVE acceleration enabled, you can run the following command:

make exec-sve

This will place the resulting miden executable into the ./target/optimized directory.

Similar to Metal acceleration, SVE/AVX2 acceleration is currently applicable only to recursive proofs which can be generated using the -r flag.

Running Miden VM

Once the executable has been compiled, you can run Miden VM like so:

./target/optimized/miden [subcommand] [parameters]

Currently, Miden VM can be executed with the following subcommands:

run- this will execute a Miden assembly program and output the result, but will not generate a proof of execution.prove- this will execute a Miden assembly program, and will also generate a STARK proof of execution.verify- this will verify a previously generated proof of execution for a given program.compile- this will compile a Miden assembly program (i.e., build a program MAST) and outputs stats about the compilation process.debug- this will instantiate a Miden debugger against the specified Miden assembly program and inputs.analyze- this will run a Miden assembly program against specific inputs and will output stats about its execution.repl- this will initiate the Miden REPL tool.example- this will execute a Miden assembly example program, generate a STARK proof of execution and verify it. Currently, it is possible to runblake3andfibonacciexamples.

All of the above subcommands require various parameters to be provided. To get more detailed help on what is needed for a given subcommand, you can run the following:

./target/optimized/miden [subcommand] --help

For example:

./target/optimized/miden prove --help

To execute a program using the Miden VM there needs to be a .masm file containing the Miden Assembly code and a .inputs file containing the inputs.

Enabling logging

You can use MIDEN_LOG environment variable to control how much logging output the VM produces. For example:

MIDEN_LOG=trace ./target/optimized/miden [subcommand] [parameters]

If the level is not specified, warn level is set as default.

Enable Debugging features

You can use the run command with --debug parameter to enable debugging with the debug instruction such as debug.stack:

./target/optimized/miden run [path_to.masm] --debug

Inputs

As described here the Miden VM can consume public and secret inputs.

- Public inputs:

operand_stack- can be supplied to the VM to initialize the stack with the desired values before a program starts executing. If the number of provided input values is less than 16, the input stack will be padded with zeros to the length of 16. The maximum number of the stack inputs is limited by 16 values, providing more than 16 values will cause an error.

- Secret (or nondeterministic) inputs:

advice_stack- can be supplied to the VM. There is no limit on how much data the advice provider can hold. This is provided as a string array where each string entry represents a field element.advice_map- is supplied as a map of 64-character hex keys, each mapped to an array of numbers. The hex keys are interpreted as 4 field elements and the arrays of numbers are interpreted as arrays of field elements.merkle_store- the Merkle store is container that allows the user to definemerkle_tree,sparse_merkle_treeandpartial_merkle_treedata structures.merkle_tree- is supplied as an array of 64-character hex values where each value represents a leaf (4 elements) in the tree.sparse_merkle_tree- is supplied as an array of tuples of the form (number, 64-character hex string). The number represents the leaf index and the hex string represents the leaf value (4 elements).partial_merkle_tree- is supplied as an array of tuples of the form ((number, number), 64-character hex string). The internal tuple represents the leaf depth and index at this depth, and the hex string represents the leaf value (4 elements).

Check out the comparison example to see how secret inputs work.

After a program finishes executing, the elements that remain on the stack become the outputs of the program. Notice that the number of values on the operand stack at the end of the program execution can not be greater than 16, otherwise the program will return an error. The truncate_stack utility procedure from the standard library could be used to conveniently truncate the stack at the end of the program.

Fibonacci example

In the miden/masm-examples/fib directory, we provide a very simple Fibonacci calculator example. This example computes the 1001st term of the Fibonacci sequence. You can execute this example on Miden VM like so:

./target/optimized/miden run miden/masm-examples/fib/fib.masm

Capturing Output

This will run the example code to completion and will output the top element remaining on the stack.

If you want the output of the program in a file, you can use the --output or -o flag and specify the path to the output file. For example:

./target/optimized/miden run miden/masm-examples/fib/fib.masm -o fib.out

This will dump the output of the program into the fib.out file. The output file will contain the state of the stack at the end of the program execution.

Running with debug instruction enabled

Inside miden/masm-examples/fib/fib.masm, insert debug.stack instruction anywhere between begin and end. Then run:

./target/optimized/miden run miden/masm-examples/fib/fib.masm -n 1 --debug

You should see output similar to "Stack state before step ..."

Performance

The benchmarks below should be viewed only as a rough guide for expected future performance. The reasons for this are twofold:

- Not all constraints have been implemented yet, and we expect that there will be some slowdown once constraint evaluation is completed.

- Many optimizations have not been applied yet, and we expect that there will be some speedup once we dedicate some time to performance optimizations.

Overall, we don't expect the benchmarks to change significantly, but there will definitely be some deviation from the below numbers in the future.

A few general notes on performance:

- Execution time is dominated by proof generation time. In fact, the time needed to run the program is usually under 1% of the time needed to generate the proof.

- Proof verification time is really fast. In most cases it is under 1 ms, but sometimes gets as high as 2 ms or 3 ms.

- Proof generation process is dynamically adjustable. In general, there is a trade-off between execution time, proof size, and security level (i.e. for a given security level, we can reduce proof size by increasing execution time, up to a point).

- Both proof generation and proof verification times are greatly influenced by the hash function used in the STARK protocol. In the benchmarks below, we use BLAKE3, which is a really fast hash function.

Single-core prover performance

When executed on a single CPU core, the current version of Miden VM operates at around 20 - 25 KHz. In the benchmarks below, the VM executes a Fibonacci calculator program on Apple M1 Pro CPU in a single thread. The generated proofs have a target security level of 96 bits.

| VM cycles | Execution time | Proving time | RAM consumed | Proof size |

|---|---|---|---|---|

| 210 | 1 ms | 60 ms | 20 MB | 46 KB |

| 212 | 2 ms | 180 ms | 52 MB | 56 KB |

| 214 | 8 ms | 680 ms | 240 MB | 65 KB |

| 216 | 28 ms | 2.7 sec | 950 MB | 75 KB |

| 218 | 81 ms | 11.4 sec | 3.7 GB | 87 KB |

| 220 | 310 ms | 47.5 sec | 14 GB | 100 KB |

As can be seen from the above, proving time roughly doubles with every doubling in the number of cycles, but proof size grows much slower.

We can also generate proofs at a higher security level. The cost of doing so is roughly doubling of proving time and roughly 40% increase in proof size. In the benchmarks below, the same Fibonacci calculator program was executed on Apple M1 Pro CPU at 128-bit target security level:

| VM cycles | Execution time | Proving time | RAM consumed | Proof size |

|---|---|---|---|---|

| 210 | 1 ms | 120 ms | 30 MB | 61 KB |

| 212 | 2 ms | 460 ms | 106 MB | 77 KB |

| 214 | 8 ms | 1.4 sec | 500 MB | 90 KB |

| 216 | 27 ms | 4.9 sec | 2.0 GB | 103 KB |

| 218 | 81 ms | 20.1 sec | 8.0 GB | 121 KB |

| 220 | 310 ms | 90.3 sec | 20.0 GB | 138 KB |

Multi-core prover performance

STARK proof generation is massively parallelizable. Thus, by taking advantage of multiple CPU cores we can dramatically reduce proof generation time. For example, when executed on an 8-core CPU (Apple M1 Pro), the current version of Miden VM operates at around 100 KHz. And when executed on a 64-core CPU (Amazon Graviton 3), the VM operates at around 250 KHz.

In the benchmarks below, the VM executes the same Fibonacci calculator program for 220 cycles at 96-bit target security level:

| Machine | Execution time | Proving time | Execution % | Implied Frequency |

|---|---|---|---|---|

| Apple M1 Pro (16 threads) | 310 ms | 7.0 sec | 4.2% | 140 KHz |

| Apple M2 Max (16 threads) | 280 ms | 5.8 sec | 4.5% | 170 KHz |

| AMD Ryzen 9 5950X (16 threads) | 270 ms | 10.0 sec | 2.6% | 100 KHz |

| Amazon Graviton 3 (64 threads) | 330 ms | 3.6 sec | 8.5% | 265 KHz |

Recursive proofs

Proofs in the above benchmarks are generated using BLAKE3 hash function. While this hash function is very fast, it is not very efficient to execute in Miden VM. Thus, proofs generated using BLAKE3 are not well-suited for recursive proof verification. To support efficient recursive proofs, we need to use an arithmetization-friendly hash function. Miden VM natively supports Rescue Prime Optimized (RPO), which is one such hash function. One of the downsides of arithmetization-friendly hash functions is that they are considerably slower than regular hash functions.

In the benchmarks below we execute the same Fibonacci calculator program for 220 cycles at 96-bit target security level using RPO hash function instead of BLAKE3:

| Machine | Execution time | Proving time | Proving time (HW) |

|---|---|---|---|

| Apple M1 Pro (16 threads) | 310 ms | 94.3 sec | 42.0 sec |

| Apple M2 Max (16 threads) | 280 ms | 75.1 sec | 20.9 sec |

| AMD Ryzen 9 5950X (16 threads) | 270 ms | 59.3 sec | |

| Amazon Graviton 3 (64 threads) | 330 ms | 21.7 sec | 14.9 sec |

In the above, proof generation on some platforms can be hardware-accelerated. Specifically:

- On Apple M1/M2 platforms the built-in GPU is used for a part of proof generation process.

- On the Graviton platform, SVE vector extension is used to accelerate RPO computations.

Development Tools and Resources

The following tools are available for interacting with Miden VM:

- Via the miden-vm crate (or within the Miden VM repo):

- Via your browser:

- The interactive Miden VM Playground for writing, executing, proving, and verifying programs from your browser.

The following resources are available to help you get started programming with Miden VM more quickly:

- The Miden VM examples repo contains examples of programs written in Miden Assembly.

- Our Scaffolded repo can be cloned for starting a new Rust project using Miden VM.

Miden Debugger

The Miden debugger is a command-line interface (CLI) application, inspired by GNU gdb, which allows debugging of Miden assembly (MASM) programs. The debugger allows the user to step through the execution of the program, both forward and backward, either per clock cycle tick, or via breakpoints.

The Miden debugger supports the following commands:

| Command | Shortcut | Arguments | Description |

|---|---|---|---|

| next | n | count? | Steps count clock cycles. Will step 1 cycle of count is omitted. |

| continue | c | - | Executes the program until completion, failure or a breakpoint. |

| back | b | count? | Backward step count clock cycles. Will back-step 1 cycle of count is omitted. |

| rewind | r | - | Executes the program backwards until the beginning, failure or a breakpoint. |

| p | - | Displays the complete state of the virtual machine. | |

| print mem | p m | address? | Displays the memory value at address. If address is omitted, didisplays all the memory values. |

| print stack | p s | index? | Displays the stack value at index. If index is omitted, displays all the stack values. |

| clock | c | - | Displays the current clock cycle. |

| quit | q | - | Quits the debugger. |

| help | h | - | Displays the help message. |

In order to start debugging, the user should provide a MASM program:

cargo run --features executable -- debug --assembly miden/masm-examples/nprime/nprime.masm

The expected output is:

============================================================

Debug program

============================================================

Reading program file `miden/masm-examples/nprime/nprime.masm`

Compiling program... done (16 ms)

Debugging program with hash 11dbbddff27e26e48be3198133df8cbed6c5875d0fb

606c9f037c7893fde4118...

Reading input file `miden/masm-examples/nprime/nprime.inputs`

Welcome! Enter `h` for help.

>>

In order to add a breakpoint, the user should insert a breakpoint instruction into the MASM file. This will generate a Noop operation that will be decorated with the debug break configuration. This is a provisory solution until the source mapping is implemented.

The following example will halt on the third instruction of foo:

proc.foo

dup

dup.2

breakpoint

swap

add.1

end

begin

exec.foo

end

Miden REPL

The Miden Read–eval–print loop (REPL) is a Miden shell that allows for quick and easy debugging of Miden assembly. After the REPL gets initialized, you can execute any Miden instruction, undo executed instructions, check the state of the stack and memory at a given point, and do many other useful things! When the REPL is exited, a history.txt file is saved. One thing to note is that all the REPL native commands start with an ! to differentiate them from regular assembly instructions.

Miden REPL can be started via the CLI repl command like so:

./target/optimized/miden repl

It is also possible to initialize REPL with libraries. To create it with Miden standard library you need to specify -s or --stdlib subcommand, it is also possible to add a third-party library by specifying -l or --libraries subcommand with paths to .masl library files. For example:

./target/optimized/miden repl -s -l example/library.masl

Miden assembly instruction

All Miden instructions mentioned in the Miden Assembly sections are valid. One can either input instructions one by one or multiple instructions in one input.

For example, the below two commands will result in the same output.

>> push.1

>> push.2

>> push.3

push.1 push.2 push.3

To execute a control flow operation, one must write the entire statement in a single line with spaces between individual operations.

repeat.20

pow2

end

The above example should be written as follows in the REPL tool:

repeat.20 pow2 end

!help

The !help command prints out all the available commands in the REPL tool.

!program

The !program command prints out the entire Miden program being executed. E.g., in the below scenario:

>> push.1.2.3.4

>> repeat.16 pow2 end

>> u32wrapping_add

>> !program

begin

push.1.2.3.4

repeat.16 pow2 end

u32wrapping_add

end

!stack

The !stack command prints out the state of the stack at the last executed instruction. Since the stack always contains at least 16 elements, 16 or more elements will be printed out (even if all of them are zeros).

>> push.1 push.2 push.3 push.4 push.5

>> exp

>> u32wrapping_mul

>> swap

>> eq.2

>> assert

The !stack command will print out the following state of the stack:

>> !stack

3072 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

!mem

The !mem command prints out the contents of all initialized memory locations. For each such location, the address, along with its memory values, is printed. Recall that four elements are stored at each memory address.

If the memory has at least one value that has been initialized:

>> !mem

7: [1, 2, 0, 3]

8: [5, 7, 3, 32]

9: [9, 10, 2, 0]

If the memory is not yet been initialized:

>> !mem

The memory has not been initialized yet

!mem[addr]

The !mem[addr] command prints out memory contents at the address specified by addr.

If the addr has been initialized:

>> !mem[9]

9: [9, 10, 2, 0]

If the addr has not been initialized:

>> !mem[87]

Memory at address 87 is empty

!use

The !use command prints out the list of all modules available for import.

If the stdlib was added to the available libraries list !use command will print all its modules:

>> !use

Modules available for importing:

std::collections::mmr

std::collections::smt

...

std::mem

std::sys

std::utils

Using the !use command with a module name will add the specified module to the program imports:

>> !use std::math::u64

>> !program

use.std::math::u64

begin

end

!undo

The !undo command reverts to the previous state of the stack and memory by dropping off the last executed assembly instruction from the program. One could use !undo as often as they want to restore the state of a stack and memory instructions ago (provided there are instructions in the program). The !undo command will result in an error if no remaining instructions are left in the Miden program.

>> push.1 push.2 push.3

>> push.4

>> !stack

4 3 2 1 0 0 0 0 0 0 0 0 0 0 0 0

>> push.5

>> !stack

5 4 3 2 1 0 0 0 0 0 0 0 0 0 0 0

>> !undo

4 3 2 1 0 0 0 0 0 0 0 0 0 0 0 0

>> !undo

3 2 1 0 0 0 0 0 0 0 0 0 0 0 0 0

User Documentation

In the following sections, we provide developer-focused documentation useful to those who want to develop on Miden VM or build compilers from higher-level languages to Miden VM.

This documentation consists of two high-level sections:

- Miden assembly which provides a detailed description of Miden assembly language, which is the native language of Miden VM.

- Miden Standard Library which provides descriptions of all procedures available in Miden Standard Library.

For info on how to run programs on Miden VM, please refer to the usage section in the introduction.

Miden Assembly

Miden assembly is a simple, low-level language for writing programs for Miden VM. It stands just above raw Miden VM instruction set, and in fact, many instructions of Miden assembly map directly to raw instructions of Miden VM.

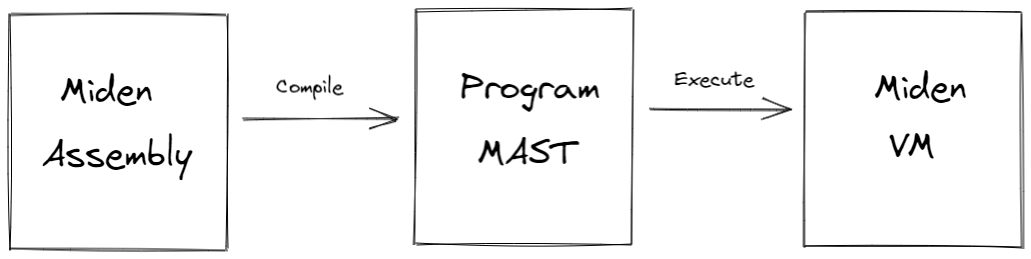

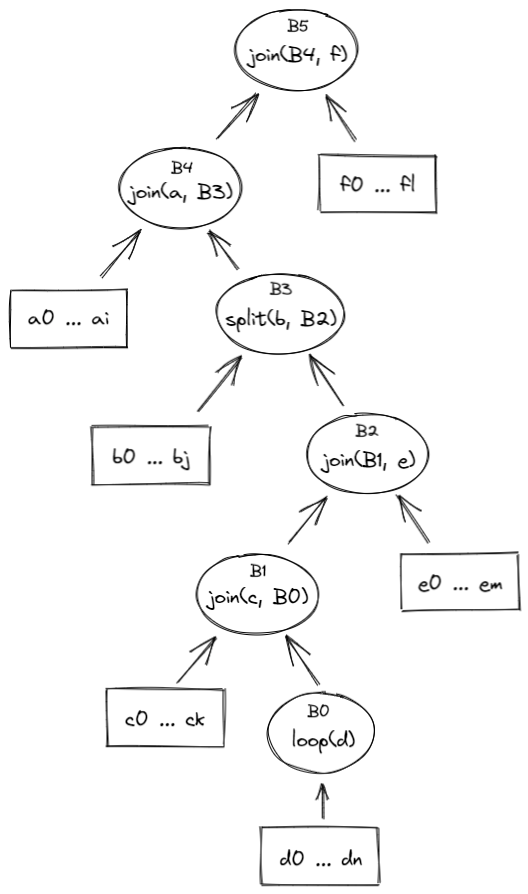

Before Miden assembly can be executed on Miden VM, it needs to be compiled into a Program MAST (Merkelized Abstract Syntax Tree) which is a binary tree of code blocks each containing raw Miden VM instructions.

As compared to raw Miden VM instructions, Miden assembly has several advantages:

- Miden assembly is intended to be a more stable external interface for the VM. That is, while we plan to make significant changes to the underlying VM to optimize it for stability, performance etc., we intend to make very few breaking changes to Miden assembly.

- Miden assembly natively supports control flow expressions which the assembler automatically transforms into a program MAST. This greatly simplifies writing programs with complex execution logic.

- Miden assembly supports macro instructions. These instructions expand into short sequences of raw Miden VM instructions making it easier to encode common operations.

- Miden assembly supports procedures. These are stand-alone blocks of code which the assembler inlines into program MAST at compile time. This improves program modularity and code organization.

The last two points also make Miden assembly much more concise as compared to the raw program MAST. This may be important in the blockchain context where pubic programs need to be stored on chain.

Terms and notations

In this document we use the following terms and notations:

- is the modulus of the VM's base field which is equal to .

- A binary value means a field element which is either or .

- Inequality comparisons are assumed to be performed on integer representations of field elements in the range .

Throughout this document, we use lower-case letters to refer to individual field elements (e.g., ). Sometimes it is convenient to describe operations over groups of elements. For these purposes we define a word to be a group of four elements. We use upper-case letters to refer to words (e.g., ). To refer to individual elements within a word, we use numerical subscripts. For example, is the first element of word , is the last element of word , etc.

Design goals

The design of Miden assembly tries to achieve the following goals:

- Miden assembly should be an easy compilation target for high-level languages.

- Programs written in Miden assembly should be readable, even if the code is generated by a compiler from a high-level language.

- Control flow should be easy to understand to help in manual inspection, formal verification, and optimization.

- Compilation of Miden assembly into Miden program MAST should be as straight-forward as possible.

- Serialization of Miden assembly into a binary representation should be as compact and as straight-forward as possible.

In order to achieve the first goal, Miden assembly exposes a set of native operations over 32-bit integers and supports linear read-write memory. Thus, from the stand-point of a higher-level language compiler, Miden VM can be viewed as a regular 32-bit stack machine with linear read-write memory.

In order to achieve the second and third goals, Miden assembly facilitates flow control via high-level constructs like while loops, if-else statements, and function calls with statically defined targets. Thus, for example, there are no explicit jump instructions.

In order to achieve the fourth goal, Miden assembly retains direct access to the VM stack rather than abstracting it away with higher-level constructs and named variables.

Lastly, in order to achieve the fifth goal, each instruction of Miden assembly can be encoded using a single byte. The resulting byte-code is simply a one-to-one mapping of instructions to their binary values.

Code organization

A Miden assembly program is just a sequence of instructions each describing a specific directive or an operation. You can use any combination of whitespace characters to separate one instruction from another.

In turn, Miden assembly instructions are just keywords which can be parameterized by zero or more parameters. The notation for specifying parameters is keyword.param1.param2 - i.e., the parameters are separated by periods. For example, push.123 instruction denotes a push operation which is parameterized by value 123.

Miden assembly programs are organized into procedures. Procedures, in turn, can be grouped into modules.

Procedures

A procedure can be used to encapsulate a frequently-used sequence of instructions which can later be invoked via a label. A procedure must start with a proc.<label>.<number of locals> instruction and terminate with an end instruction. For example:

proc.foo.2

<instructions>

end

A procedure label must start with a letter and can contain any combination of numbers, ASCII letters, and underscores (_). Should you need to represent a label with other characters, an extended set is permitted via quoted identifiers, i.e. an identifier surrounded by "..". Quoted identifiers additionally allow any alphanumeric letter (ASCII or UTF-8), as well as various common punctuation characters: !, ?, :, ., <, >, and -. Quoted identifiers are primarily intended for representing symbols/identifiers when compiling higher-level languages to Miden Assembly, but can be used anywhere that normal identifiers are expected.

The number of locals specifies the number of memory-based local field elements a procedure can access (via loc_load, loc_store, and other instructions). If a procedure doesn't need any memory-based locals, this parameter can be omitted or set to 0. A procedure can have at most locals, and the total number of locals available to all procedures at runtime is limited to . Note that the assembler internally always rounds up the number of declared locals to the nearest multiple of 4.

To execute a procedure, the exec.<label>, call.<label>, and syscall.<label> instructions can be used. For example:

exec.foo

The difference between using each of these instructions is explained in the next section.

A procedure may execute any other procedure, however recursion is not currently permitted, due to limitations imposed by the Merkalized Abstract Syntax Tree. Recursion is caught by static analysis of the call graph during assembly, so in general you don't need to think about this, but it is a limitation to be aware of. For example, the following code block defines a program with two procedures:

proc.bar

<instructions>

exec.foo

<instructions>

end

proc.foo

<instructions>

end

begin

<instructions>

exec.bar

<instructions>

exec.foo

end

Dynamic procedure invocation

It is also possible to invoke procedures dynamically - i.e., without specifying target procedure labels at compile time. A procedure can only call itself using dynamic invocation. There are two instructions, dynexec and dyncall, which can be used to execute dynamically-specified code targets. Both instructions expect the MAST root of the target to be stored in memory, and the memory address of the MAST root to be on the top of the stack. The difference between dynexec and dyncall corresponds to the difference between exec and call, see the documentation on procedure invocation semantics for more details.

Dynamic code execution in the same context is achieved by setting the top element of the stack to the memory address where the hash of the dynamic code block is stored, and then executing the dynexec or dyncall instruction. You can obtain the hash of a procedure in the current program, by name, using the procref instruction. See the following example of pairing the two:

# Retrieve the hash of `foo`, store it at `ADDR`, and push `ADDR` on top of the stack

procref.foo mem_storew.ADDR dropw push.ADDR

# Execute `foo` dynamically

dynexec

During assembly, the procref.foo instruction is compiled to a push.HASH, where HASH is the hash of the MAST root of the foo procedure.

During execution of the dynexec instruction, the VM does the following:

- Read the top stack element , and read the memory word starting at address (the hash of the dynamic target),

- Shift the stack left by one element,

- Load the code block referenced by the hash, or trap if no such MAST root is known,

- Execute the loaded code block.

The dyncall instruction is used the same way, with the difference that it involves a context switch to a new context when executing the referenced block, and switching back to the calling context once execution of the callee completes.

Modules

A module consists of one or more procedures. There are two types of modules: library modules and executable modules (also called programs).

Library modules

Library modules contain zero or more internal procedures and one or more exported procedures. For example, the following module defines one internal procedure (defined with proc instruction) and one exported procedure (defined with export instruction):

proc.foo

<instructions>

end

export.bar

<instructions>

exec.foo

<instructions>

end

Programs

Executable modules are used to define programs. A program contains zero or more internal procedures (defined with proc instruction) and exactly one main procedure (defined with begin instruction). For example, the following module defines one internal procedure and a main procedure:

proc.foo

<instructions>

end

begin

<instructions>

exec.foo

<instructions>

end

A program cannot contain any exported procedures.

When a program is executed, the execution starts at the first instruction following the begin instruction. The main procedure is expected to be the last procedure in the program and can be followed only by comments.

Importing modules

To reference items in another module, you must either import the module you wish to use, or specify a fully-qualified path to the item you want to reference.

To import a module, you must use the use keyword in the top level scope of the current module, as shown below:

use.std::math::u64

begin

...

end

In this example, the std::math::u64 module is imported as u64, the default "alias" for the imported module. You can specify a different alias like so:

use.std::math::u64->bigint

This would alias the imported module as bigint rather than u64. The alias is needed to reference items from the imported module, as shown below:

use.std::math::u64

begin

push.1.0

push.2.0

exec.u64::wrapping_add

end

You can also bypass imports entirely, and specify an absolute procedure path, which requires prefixing the path with ::. For example:

begin

push.1.0

push.2.0

exec.::std::math::u64::wrapping_add

end

In the examples above, we have been referencing the std::math::u64 module, which is a module in the Miden Standard Library. There are a number of useful modules there, that provide a variety of helpful functionality out of the box.

If the assembler does not know about the imported modules, assembly will fail. You can register modules with the assembler when instantiating it, either in source form, or precompiled form. See the miden-assembly docs for details. The assembler will use this information to resolve references to imported procedures during assembly.

Re-exporting procedures

A procedure defined in one module can be re-exported from a different module under the same or a different name. For example:

use.std::math::u64

export.u64::add

export.u64::mul->mul64

export.foo

<instructions>

end

In the module shown above, not only is the locally-defined procedure foo exported, but so are two procedures named add and mul64, whose implementations are defined in the std::math::u64 module.

Similar to procedure invocation, you can bypass the explicit import by specifying an absolute path, like so:

export.::std::math::u64::mul->mul64

Additionally, you may re-export a procedure using its MAST root, so long as you specify an alias:

export.0x0000..0000->mul64

In all of the forms described above, the actual implementation of the re-exported procedure is defined externally. Other modules which reference the re-exported procedure, will have those references resolved to the original procedure during assembly.

Constants

Miden assembly supports constant declarations. These constants are scoped to the module they are defined in and can be used as immediate parameters for Miden assembly instructions. Constants are supported as immediate values for many of the instructions in the Miden Assembly instruction set, see the documentation for specific instructions to determine whether or not it provides a form which accepts immediate operands.

Constants must be declared right after module imports and before any procedures or program bodies. A constant's name must start with an upper-case letter and can contain any combination of numbers, upper-case ASCII letters, and underscores (_). The number of characters in a constant name cannot exceed 100.

A constant's value must be in a decimal or hexadecimal form and be in the range between and (both inclusive). Value can be defined by an arithmetic expression using +, -, *, /, //, (, ) operators and references to the previously defined constants if it uses only decimal numbers. Here / is a field division and // is an integer division. Note that the arithmetic expression cannot contain spaces.

use.std::math::u64

const.CONSTANT_1=100

const.CONSTANT_2=200+(CONSTANT_1-50)

const.ADDR_1=3

begin

push.CONSTANT_1.CONSTANT_2

exec.u64::wrapping_add

mem_store.ADDR_1

end

Comments

Miden assembly allows annotating code with simple comments. There are two types of comments: single-line comments which start with a # (pound) character, and documentation comments which start with #! characters. For example:

#! This is a documentation comment

export.foo

# this is a comment

push.1

end

Documentation comments must precede a procedure declaration. Using them inside a procedure body is an error.

Execution contexts

Miden assembly program execution can span multiple isolated contexts. An execution context defines its own memory space which is not accessible from other execution contexts.

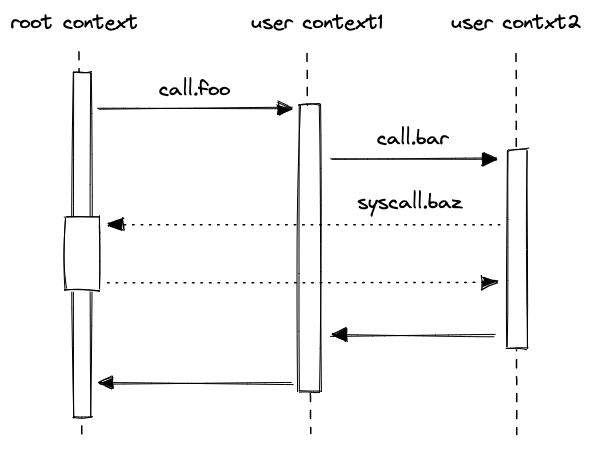

All programs start executing in a root context. Thus, the main procedure of a program is always executed in the root context. To move execution into a different context, we can invoke a procedure using the call instruction. In fact, any time we invoke a procedure using the call instruction, the procedure is executed in a new context. We refer to all non-root contexts as user contexts.

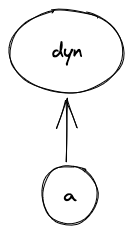

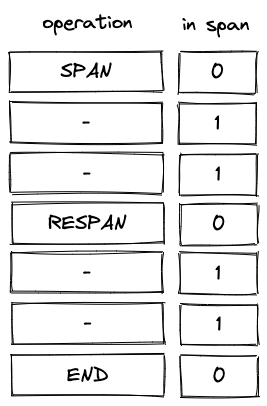

While executing in a user context, we can request to execute some procedures in the root context. This can be done via the syscall instruction. The set of procedures which can be invoked via the syscall instruction is limited by the kernel against which a program is compiled. Once the procedure called via syscall returns, the execution moves back to the user context from which it was invoked. The diagram below illustrates this graphically:

Procedure invocation semantics

As mentioned in the previous section, procedures in Miden assembly can be invoked via five different instructions: exec, call, syscall, dynexec, and dyncall. Invocation semantics of call, dyncall, and syscall instructions are basically the same, the only difference being that the syscall instruction can be used only with procedures which are defined in the program's kernel. The exec and dynexec instructions are different, and we explain these differences below.

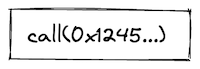

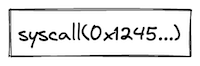

Invoking via call, dyncall, and syscall instructions

When a procedure is invoked via a call, dyncall, or a syscall instruction, the following happens:

- Execution moves into a different context. In case of the

callanddyncallinstructions, a new user context is created. In case of asyscallinstruction, the execution moves back into the root context. - All stack items beyond the 16th item get "hidden" from the invoked procedure. That is, from the standpoint of the invoked procedure, the initial stack depth is set to 16.

- Note that for

dyncall, the stack is shifted left by one element before being set to 16.

- Note that for

When the callee returns, the following happens:

- The execution context of the caller is restored

- If the original stack depth was greater than 16, those elements that were "hidden" during the call as described above, are restored. However, the stack depth must be exactly 16 elements when the procedure returns, or this will fail and the VM will trap.

The manipulations of the stack depth described above have the following implications:

- The top 16 elements of the stack can be used to pass parameters and return values between the caller and the callee.

- Caller's stack beyond the top 16 elements is inaccessible to the callee, and thus, is guaranteed not to change as the result of the call.

- As mentioned above, in the case of

dyncall, the elements at indices 1 to 17 at the call site will be accessible to the callee (shifted to indices 0 to 16)

- As mentioned above, in the case of

- At the end of its execution, the callee must ensure that stack depth is exactly 16. If this is difficult to ensure manually, the

truncate_stackprocedure can be used to drop all elements from the stack except for the top 16.

Invoking via exec instruction

The exec instruction can be thought of as the "normal" way of invoking procedures, i.e. it has semantics that would be familiar to anyone coming from a standard programming language, or that is familiar with procedure call instructions in a typical assembly language.

In Miden Assembly, it is used to execute procedures without switching execution contexts, i.e. the callee executes in the same context as the caller. Conceptually, invoking a procedure via exec behaves as if the body of that procedure was inlined at the call site. In practice, the procedure may or may not be actually inlined, based on compiler optimizations around code size, but there is no actual performance tradeoff in the usual sense. Thus, when executing a program, there is no meaningful difference between executing a procedure via exec, or replacing the exec with the body of the procedure.

Kernels

A kernel defines a set of procedures which can be invoked from user contexts to be executed in the root context. Miden assembly programs are always compiled against some kernel. The default kernel is empty - i.e., it does not contain any procedures. To compile a program against a non-empty kernel, the kernel needs to be specified when instantiating the Miden Assembler.

A kernel can be defined similarly to a regular library module - i.e., it can have internal and exported procedures. However, there are some small differences between what procedures can do in a kernel module vs. what they can do in a regular library module. Specifically:

- Procedures in a kernel module cannot use

call,dyncallorsyscallinstructions. This means that creating a new context from within asyscallis not possible. - Unlike procedures in regular library modules, procedures in a kernel module can use the

callerinstruction. This instruction puts the hash of the procedure which initiated the parent context onto the stack.

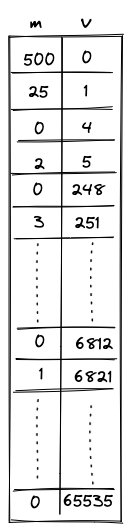

Memory layout

As mentioned earlier, procedures executed within a given context can access memory only of that context. This is true for both memory reads and memory writes.

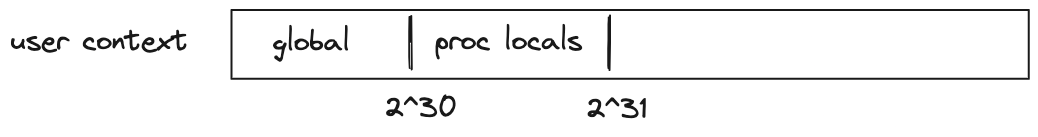

Address space of every context is the same: the smallest accessible address is and the largest accessible address is . Any code executed in a given context has access to its entire address space. However, by convention, we assign different meanings to different regions of the address space.

For user contexts we have the following:

- The first addresses are assumed to be global memory.

- The next addresses are reserved for memory locals of procedures executed in the same context (i.e., via the

execinstruction). - The remaining address space has no special meaning.

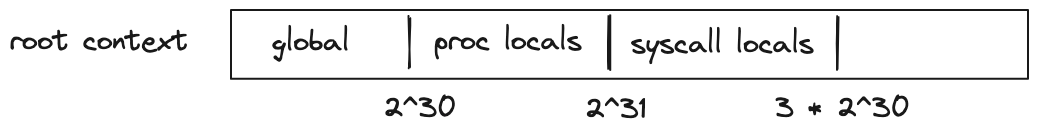

For the root context we have the following:

- The first addresses are assumed to be global memory.

- The next addresses are reserved for memory locals of procedures executed in the root context.

- The next addresses are reserved for memory locals of procedures executed from within a

syscall. - The remaining address space has no special meaning.

For both types of contexts, writing directly into regions of memory reserved for procedure locals is not advisable. Instead, loc_load, loc_store and other similar dedicated instructions should be used to access procedure locals.

Example

To better illustrate what happens as we execute procedures in different contexts, let's go over the following example.

kernel

--------------------

export.baz.2

<instructions>

caller

<instructions>

end

program

--------------------

proc.bar.1

<instructions>

syscall.baz

<instructions>

end

proc.foo.3

<instructions>

call.bar

<instructions>

exec.bar

<instructions>

end

begin

<instructions>

call.foo

<instructions>

end

Execution of the above program proceeds as follows:

- The VM starts executing instructions immediately following the

beginstatement. These instructions are executed in the root context (let's call this contextctx0). - When

call.foois executed, a new context is created (ctx1). Memory in this context is isolated fromctx0. Additionally, any elements on the stack beyond the top 16 are hidden fromfoo. - Instructions executed inside

foocan access memory ofctx1only. The address of the first procedure local infoo(e.g., accessed vialoc_load.0) is . - When

call.baris executed, a new context is created (ctx2). The stack depth is set to 16 again, and any instruction executed in this context can access memory ofctx2only. The first procedure local ofbaris also located at address . - When

syscall.bazis executed, the execution moves back into the root context. That is, instructions executed insidebazhave access to the memory ofctx0. The first procedure local ofbazis located at address . Whenbazstarts executing, the stack depth is again set to 16. - When

calleris executed insidebaz, the first 4 elements of the stack are populated with the hash ofbarsincebazwas invoked frombar's context. - Once

bazreturns, execution moves back toctx2, and then, whenbarreturns, execution moves back toctx1. We assume that instructions executed right before each procedure returns ensure that the stack depth is exactly 16 right before procedure's end. - Next, when

exec.baris executed,baris executed again, but this time it is executed in the same context asfoo. Thus, it can access memory ofctx1. Moreover, the stack depth is not changed, and thus,barcan access the entire stack offoo. Lastly, this first procedure local ofbarnow will be at address (since the first 3 locals in this context are reserved forfoo). - When

syscall.bazis executed the second time, execution moves into the root context again. However, now, whencalleris executed insidebaz, the first 4 elements of the stack are populated with the hash offoo(notbar). This happens because this time aroundbardoes not have its own context andbazis invoked fromfoo's context. - Finally, when

bazreturns, execution moves back toctx1, and then asbarandfooreturn, back toctx0, and the program terminates.

Flow control

As mentioned above, Miden assembly provides high-level constructs to facilitate flow control. These constructs are:

- if-else expressions for conditional execution.

- repeat expressions for bounded counter-controlled loops.

- while expressions for unbounded condition-controlled loops.

Conditional execution

Conditional execution in Miden VM can be accomplished with if-else statements. These statements can take one of the following forms:

if.true .. else .. end

This is the full form, when there is work to be done on both branches:

if.true

..instructions..

else

..instructions..

end

if.true .. end

This is the abbreviated form, for when there is only work to be done on one branch. In these cases the "unused" branch can be elided:

if.true

..instructions..

end

In addition to if.true, there is also if.false, which is identical in syntax, but for false-conditioned branches. It is equivalent in semantics to using if.true and swapping the branches.

The body of each branch, i.e. ..instructions.. in the examples above, can be a sequence of zero or more instructions (an empty body is only valid so long as at least one branch is non-empty). These can consist of any instruction, including nested control flow.

tip

As with other control structures described below that have nested blocks,

it is essential that you ensure that the state of the operand stack is

consistent at join points in control flow. For example, with if.true

control flow implicitly joins at the end of each branch. If you have moved

items around on the operand stack, or added/removed items, and those

modifications would persist past the end of that branch, it is highly

recommended that you make equivalent modifications in the opposite branch.

This is not required if modifications are local to a block.

The semantics of the if.true and if.false control operator are as follows:

- The condition is popped from the top of the stack. It must be a boolean value, i.e. for false, for true. If the condition is not a boolean value, then execution traps.

- The conditional branch is chosen:

a. If the operator is

if.true, and the condition is true, instructions in the first branch are executed; otherwise, if the condition is false, then the second branch is executed. If a branch was elided or empty, the assembler provides a default body consisting of a singlenopinstruction. b. If the operator isif.false, the behavior is identical to that ofif.true, except the condition must be false for the first branch to be taken, and true for the second branch. - Control joins at the next instruction immediately following the

if.true/if.falseinstruction.

tip

A note on performance: using if-else statements incurs a small, but non-negligible overhead. Thus, for simple conditional statements, it may be more efficient to compute the result of both branches, and then select the result using conditional drop instructions.

This does not apply to if-else statements whose bodies contain side-effects that cannot be easily adapted to this type of rewrite. For example, writing a value to global memory is a side effect, but if both branches would write to the same address, and only the value being written differs, then this can likely be rewritten to use cdrop.

Counter-controlled loops

Executing a sequence of instructions a predefined number of times can be accomplished with repeat statements. These statements look like so:

repeat.<count>

<instructions>

end

where:

instructionscan be a sequence of any instructions, including nested control structures.countis the number of times theinstructionssequence should be repeated (e.g.repeat.10).countmust be an integer or a constant greater than .

Note: During compilation the

repeat.<count>blocks are unrolled and expanded into<count>copies of its inner block, there is no additional cost for counting variables in this case.

Condition-controlled loops

Executing a sequence of instructions zero or more times based on some condition can be accomplished with while loop expressions. These expressions look like so:

while.true

<instructions>

end

where instructions can be a sequence of any instructions, including nested control structures. The above does the following:

- Pops the top item from the stack.

- If the value of the item is ,

instructionsin the loop body are executed.- After the body is executed, the stack is popped again, and if the popped value is , the body is executed again.

- If the popped value is , the loop is exited.

- If the popped value is not binary, the execution fails.

- If the value of the item is , execution of loop body is skipped.

- If the value is not binary, the execution fails.

Example:

# push the boolean true to the stack

push.1

# pop the top element of the stack and loop while it is true

while.true

# push the boolean false to the stack, finishing the loop for the next iteration

push.0

end

No-op

While rare, there may be situations where you have an empty block and require a do-nothing placeholder instruction, or where you specifically want to advance the cycle counter without any side-effects. The nop instruction can be used in these instances.

if.true

nop

else

..instructions..

end

In the example above, we do not want to perform any work if the condition is true, so we place a nop in that branch. This explicit representation of "empty" blocks is automatically done by the assembler when parsing if.true or if.false in abbreviated form, or when one of the branches is empty.

The semantics of this instruction are to increment the cycle count, and that is it - no other effects.

Field operations

Miden assembly provides a set of instructions which can perform operations with raw field elements. These instructions are described in the tables below.

While most operations place no restrictions on inputs, some operations expect inputs to be binary values, and fail if executed with non-binary inputs.

For instructions where one or more operands can be provided as immediate parameters (e.g., add and add.b), we provide stack transition diagrams only for the non-immediate version. For the immediate version, it can be assumed that the operand with the specified name is not present on the stack.

Assertions and tests

| Instruction | Stack_input | Stack_output | Notes |

|---|---|---|---|

| assert - (1 cycle) | [a, ...] | [...] | If , removes it from the stack. Fails if |

| assertz - (2 cycles) | [a, ...] | [...] | If , removes it from the stack, Fails if |

| assert_eq - (2 cycles) | [b, a, ...] | [...] | If , removes them from the stack. Fails if |

| assert_eqw - (11 cycles) | [B, A, ...] | [...] | If , removes them from the stack. Fails if |

The above instructions can also be parametrized with an error code which can be any 32-bit value specified either directly or via a named constant. For example:

assert.err=123

assert.err=MY_CONSTANT

If the error code is omitted, the default value of is assumed.

Arithmetic and Boolean operations

The arithmetic operations below are performed in a 64-bit prime field defined by modulus . This means that overflow happens after a value exceeds . Also, the result of divisions may appear counter-intuitive because divisions are defined via inversions.

| Instruction | Stack_input | Stack_output | Notes |

|---|---|---|---|

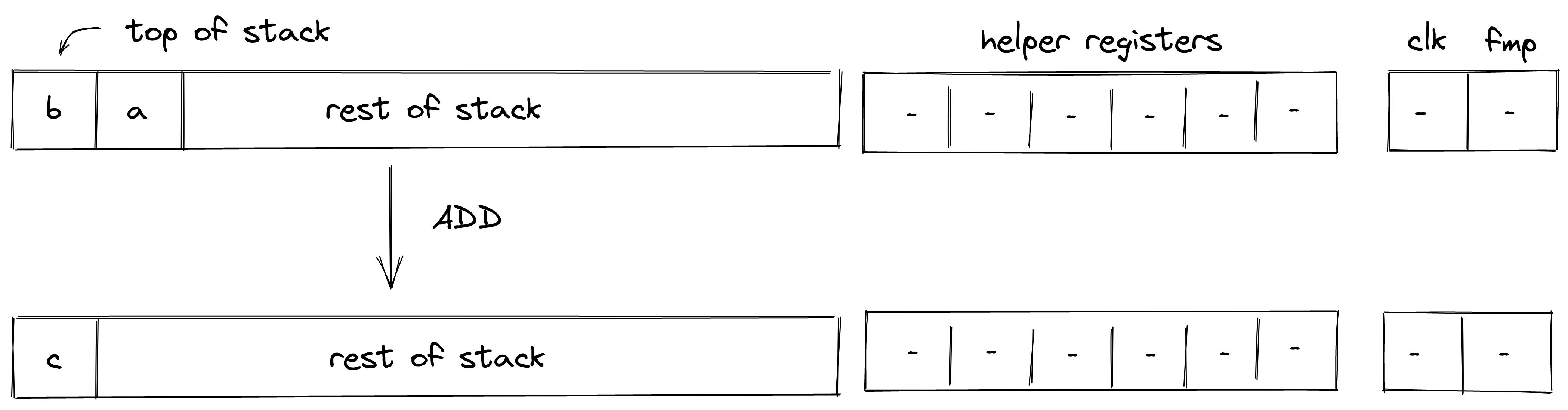

| add - (1 cycle) add.b - (1-2 cycle) | [b, a, ...] | [c, ...] | |

| sub - (2 cycles) sub.b - (2 cycles) | [b, a, ...] | [c, ...] | |

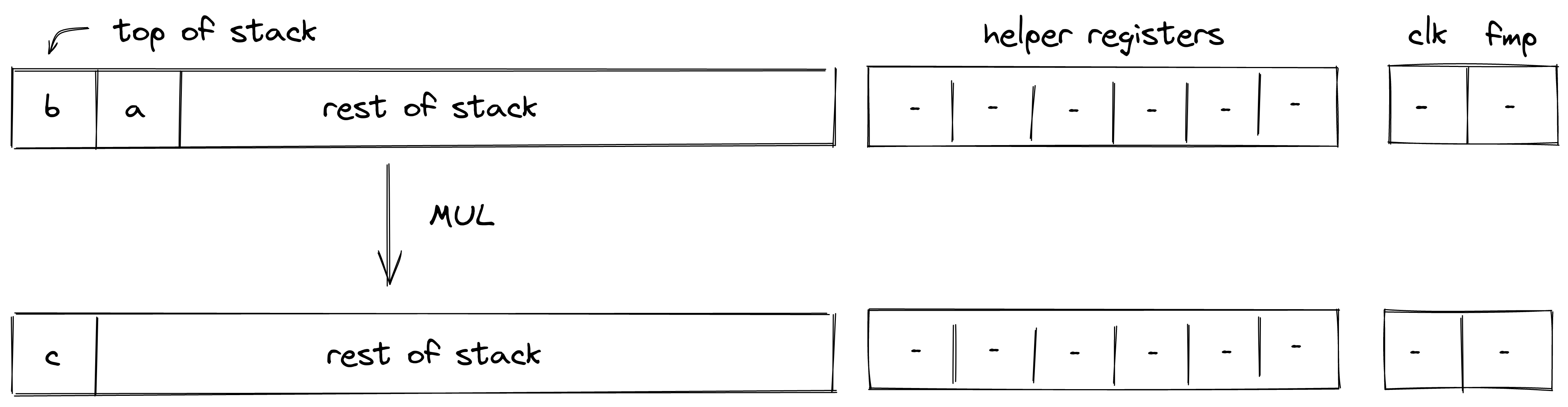

| mul - (1 cycle) mul.b - (2 cycles) | [b, a, ...] | [c, ...] | |

| div - (2 cycles) div.b - (2 cycles) | [b, a, ...] | [c, ...] | Fails if |

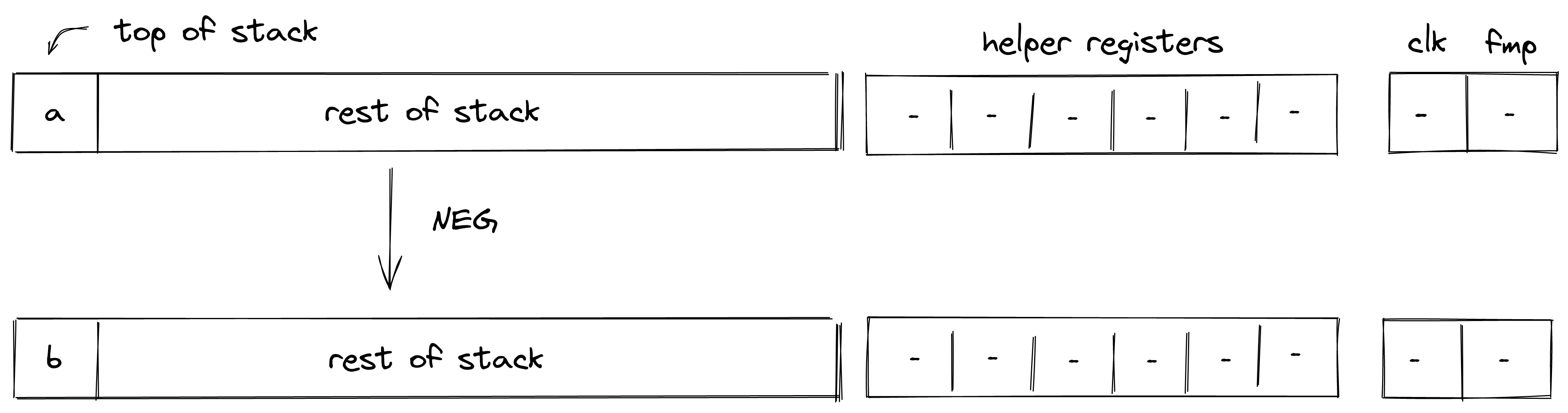

| neg - (1 cycle) | [a, ...] | [b, ...] | |

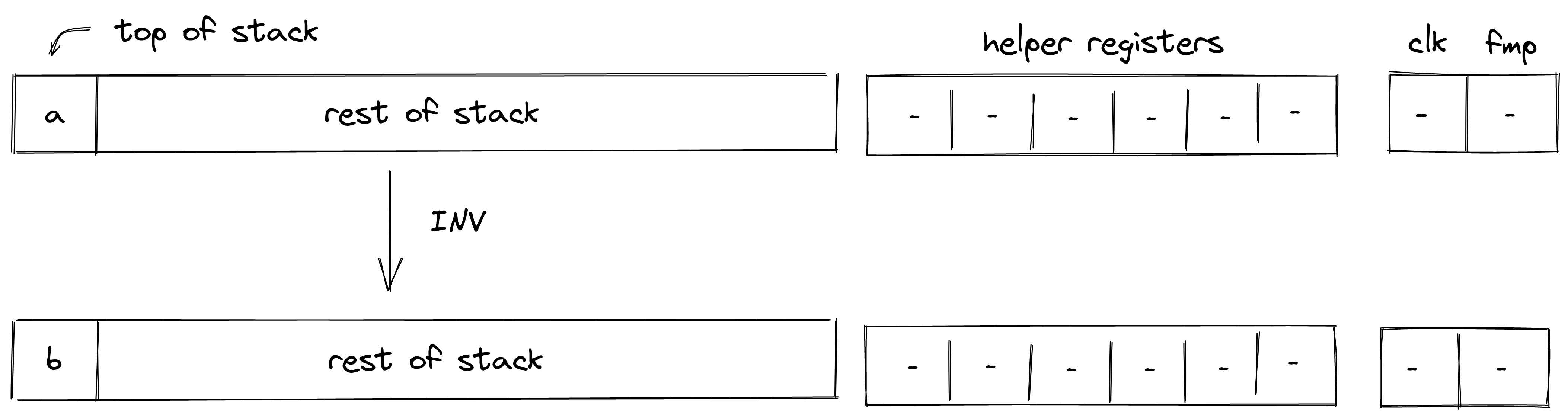

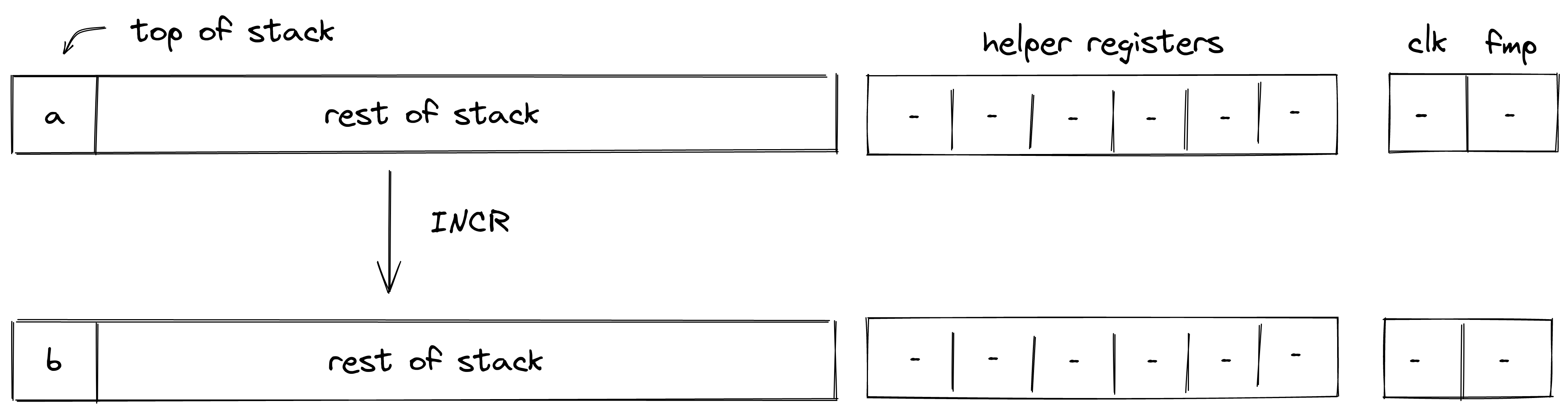

| inv - (1 cycle) | [a, ...] | [b, ...] | Fails if |

| pow2 - (16 cycles) | [a, ...] | [b, ...] | Fails if |

| exp.uxx - (9 + xx cycles) exp.b - (9 + log2(b) cycles) | [b, a, ...] | [c, ...] | Fails if xx is outside [0, 63) exp is equivalent to exp.u64 and needs 73 cycles |

| ilog2 - (44 cycles) | [a, ...] | [b, ...] | Fails if |

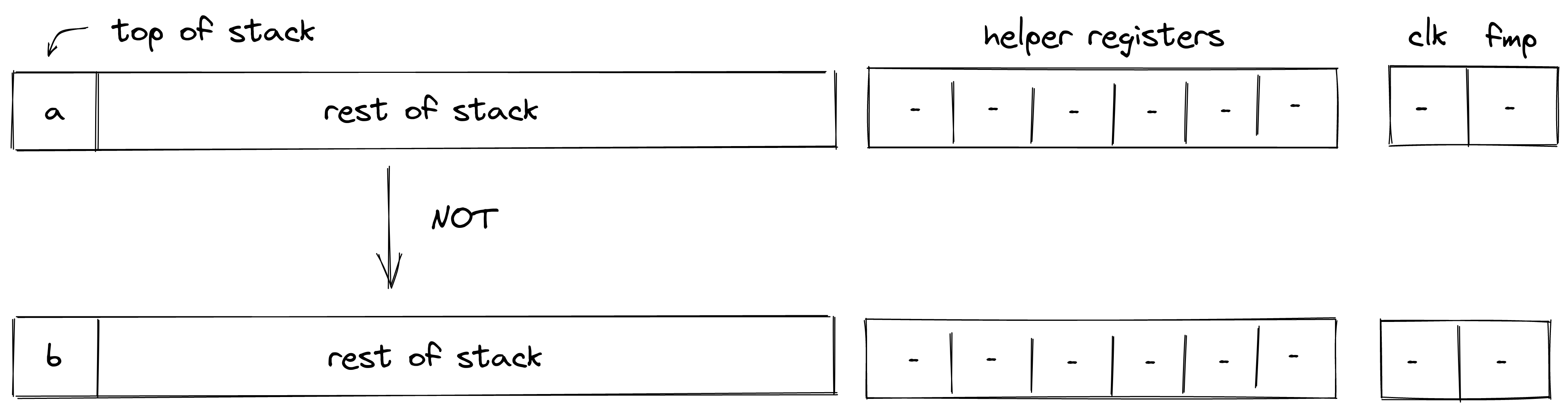

| not - (1 cycle) | [a, ...] | [b, ...] | Fails if |

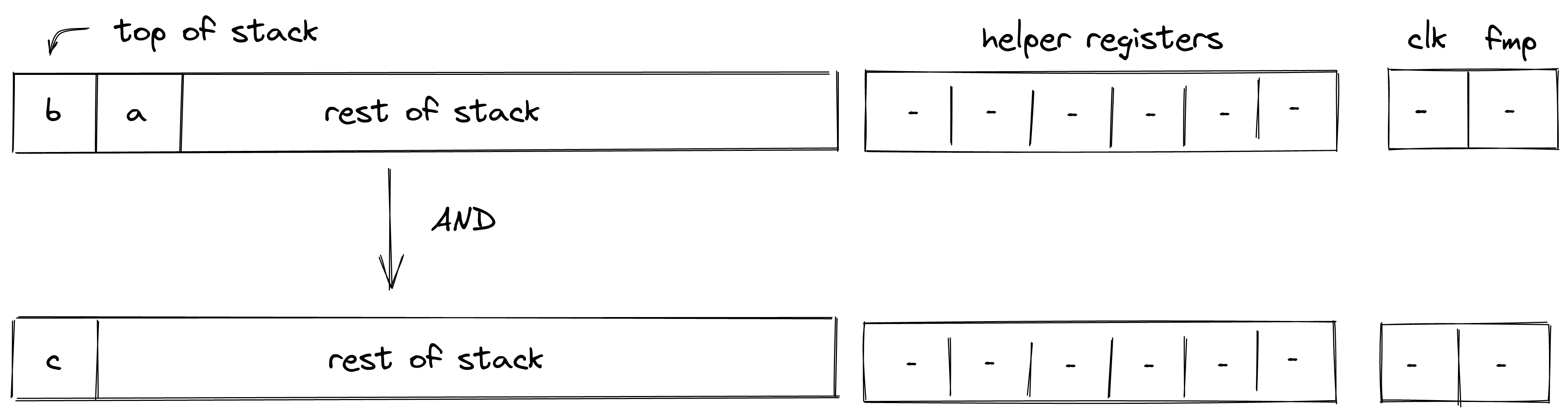

| and - (1 cycle) | [b, a, ...] | [c, ...] | Fails if |

| or - (1 cycle) | [b, a, ...] | [c, ...] | Fails if |

| xor - (7 cycles) | [b, a, ...] | [c, ...] | Fails if |

Comparison operations

| Instruction | Stack_input | Stack_output | Notes |

|---|---|---|---|

| eq - (1 cycle) eq.b - (1-2 cycles) | [b, a, ...] | [c, ...] | |

| neq - (2 cycle) neq.b - (2-3 cycles) | [b, a, ...] | [c, ...] | |

| lt - (14 cycles) lt.b - (15 cycles) | [b, a, ...] | [c, ...] | |

| lte - (15 cycles) lte.b - (16 cycles) | [b, a, ...] | [c, ...] | |

| gt - (15 cycles) gt.b - (16 cycles) | [b, a, ...] | [c, ...] | |

| gte - (16 cycles) gte.b - (17 cycles) | [b, a, ...] | [c, ...] | |

| is_odd - (5 cycles) | [a, ...] | [b, ...] | |

| eqw - (15 cycles) | [A, B, ...] | [c, A, B, ...] |

Extension Field Operations

| Instruction | Stack_input | Stack_output | Notes |

|---|---|---|---|

| ext2add - (5 cycles) | [b1, b0, a1, a0, ...] | [c1, c0, ...] | and |

| ext2sub - (7 cycles) | [b1, b0, a1, a0, ...] | [c1, c0, ...] | and |

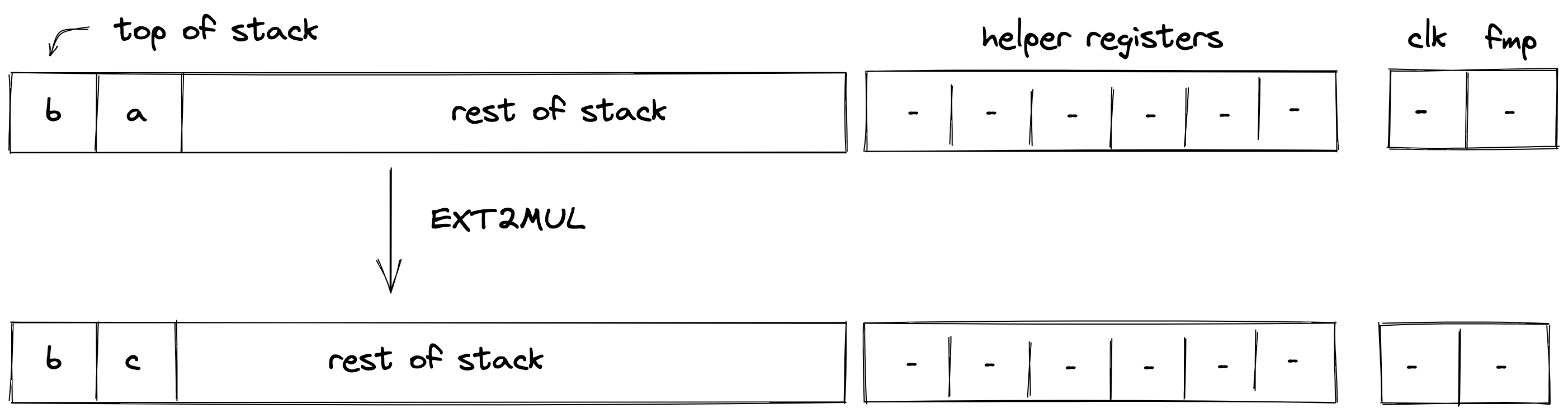

| ext2mul - (3 cycles) | [b1, b0, a1, a0, ...] | [c1, c0, ...] | and |

| ext2neg - (4 cycles) | [a1, a0, ...] | [a1', a0', ...] | and |

| ext2inv - (8 cycles) | [a1, a0, ...] | [a1', a0', ...] | Fails if |

| ext2div - (11 cycles) | [b1, b0, a1, a0, ...] | [c1, c0,] | fails if , where multiplication and inversion are as defined by the operations above |

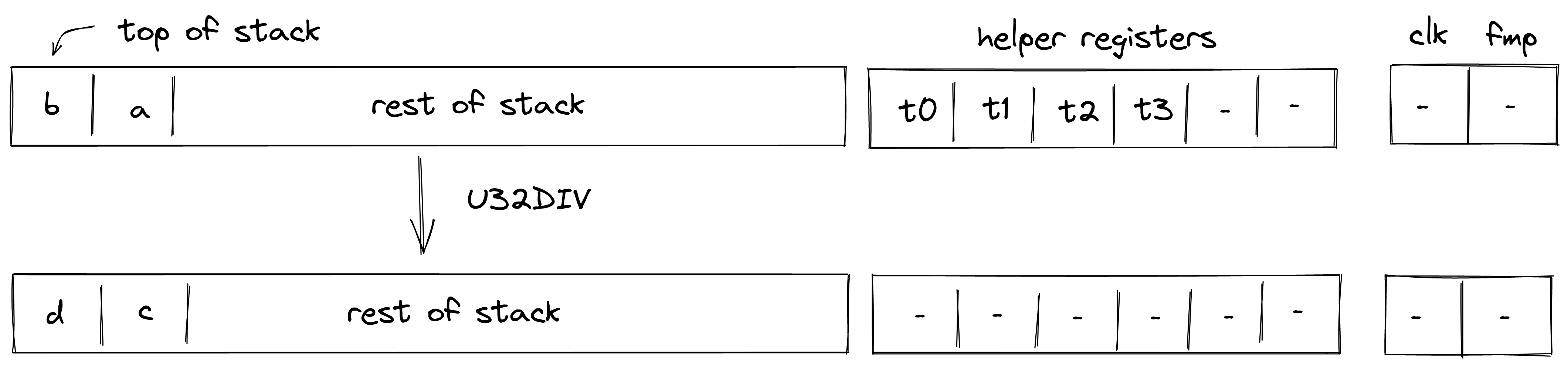

u32 operations

Miden assembly provides a set of instructions which can perform operations on regular two-complement 32-bit integers. These instructions are described in the tables below.

For instructions where one or more operands can be provided as immediate parameters (e.g., u32wrapping_add and u32wrapping_add.b), we provide stack transition diagrams only for the non-immediate version. For the immediate version, it can be assumed that the operand with the specified name is not present on the stack.

In all the table below, the number of cycles it takes for the VM to execute each instruction is listed beneath the instruction.

Notes on Undefined Behavior

Most of the instructions documented below expect to receive operands whose values are valid u32

values, i.e. values in the range . Currently, the semantics of the instructions

when given values outside of that range are undefined (as noted in the documented semantics for

each instruction). The rule with undefined behavior generally speaking is that you can make no

assumptions about what will happen if your program exhibits it.

For purposes of describing the effects of undefined behavior below, we will refer to values which

are not valid for the input type of the affected operation, e.g. u32, as poison. Any use of a

poison value propagates the poison state. For example, performing u32div with a poison operand,

can be considered as producing a poison value as its result, for the purposes of discussing

undefined behavior semantics.

With that in mind, there are two ways in which the effects of undefined behavior manifest:

Executor Semantics

From an executor perspective, currently, the semantics are completely undefined. An executor can do everything from terminate the program, panic, always produce 42 as a result, produce a random result, or something more principled.

In practice, the Miden VM, when executing an operation, will almost always trap on poison values. This is not guaranteed, but is currently the case for most operations which have niches of undefined behavior. To the extent that some other behavior may occur, it will generally be to truncate/wrap the poison value, but this is subject to change at any time, and is undocumented. You should assume that all operations will trap on poison.

The reason the Miden VM makes the choice to trap on poison, is to ensure that undefined behavior is caught close to the source, rather than propagated silently throughout the program. It also has the effect of ensuring you do not execute a program with undefined behavior, and produce a proof that is not actually valid, as we will describe in a moment.

Verifier Semantics

From the perspective of the verifier, the implementation details of the executor are completely unknown. For example, the fact that the Miden VM traps on poison values is not actually verified by constraints. An alternative executor implementation could choose not to trap, and thus appear to execute successfully. The resulting proof, however, as a result of the program exhibiting undefined behavior, is not a valid proof. In effect the use of poison values "poisons" the proof as well.

As a result, a program that exhibits undefined behavior, and executes successfully, will produce a proof that could pass verification, even though it should not. In other words, the proof does not prove what it says it does.

In the future, we may attempt to remove niches of undefined behavior in such a way that producing such invalid proofs is not possible, but for the time being, you must ensure that your program does not exhibit (or rely on) undefined behavior.

Conversions and tests

| Instruction | Stack_input | Stack_output | Notes |

|---|---|---|---|

| u32test - (5 cycles) | [a, ...] | [b, a, ...] | |

| u32testw - (23 cycles) | [A, ...] | [b, A, ...] | |

| u32assert - (3 cycles) | [a, ...] | [a, ...] | Fails if |

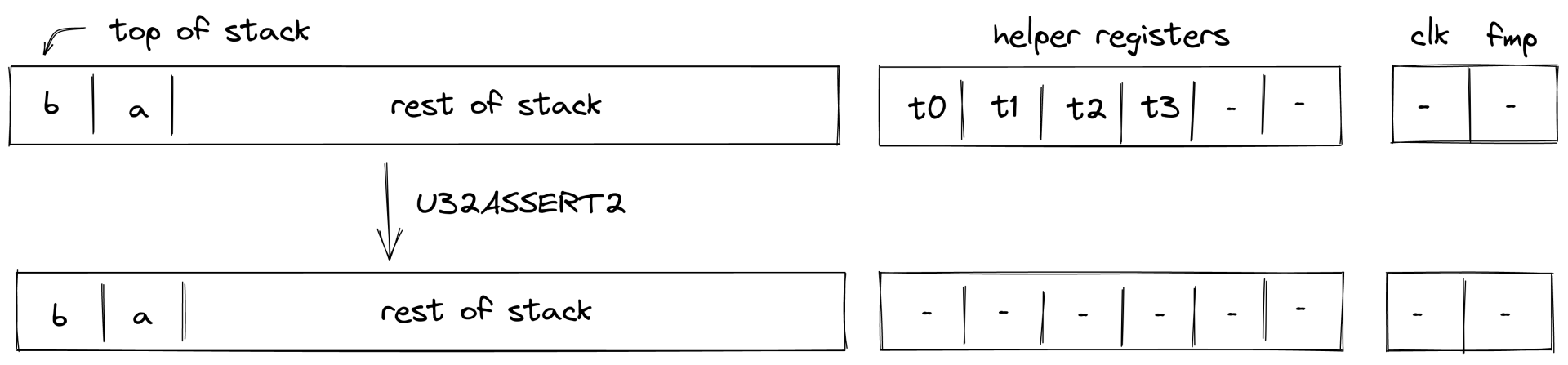

| u32assert2 - (1 cycle) | [b, a,...] | [b, a,...] | Fails if or |

| u32assertw - (6 cycles) | [A, ...] | [A, ...] | Fails if |

| u32cast - (2 cycles) | [a, ...] | [b, ...] | |

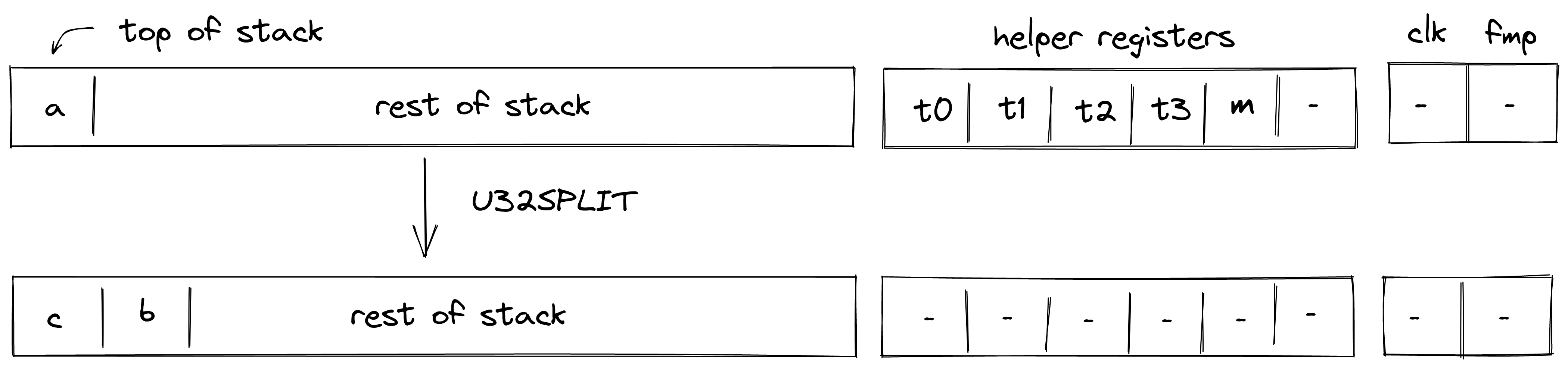

| u32split - (1 cycle) | [a, ...] | [c, b, ...] | , |

The instructions u32assert, u32assert2 and u32assertw can also be parametrized with an error code which can be any 32-bit value specified either directly or via a named constant. For example:

u32assert.err=123

u32assert.err=MY_CONSTANT

If the error code is omitted, the default value of is assumed.

Arithmetic operations

| Instruction | Stack_input | Stack_output | Notes |

|---|---|---|---|

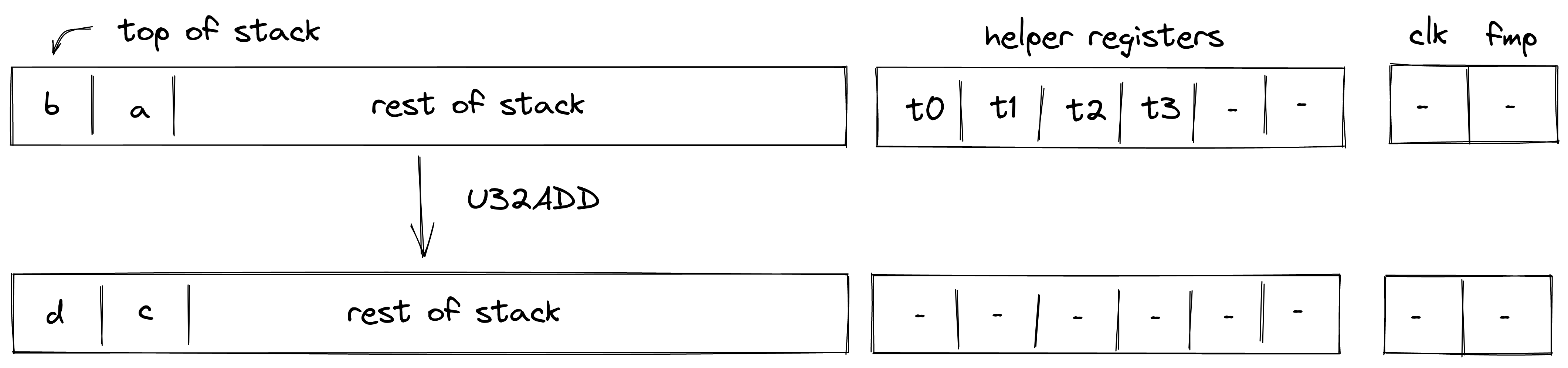

| u32overflowing_add - (1 cycle) u32overflowing_add.b - (2-3 cycles) | [b, a, ...] | [d, c, ...] | Undefined if |

| u32wrapping_add - (2 cycles) u32wrapping_add.b - (3-4 cycles) | [b, a, ...] | [c, ...] | Undefined if |

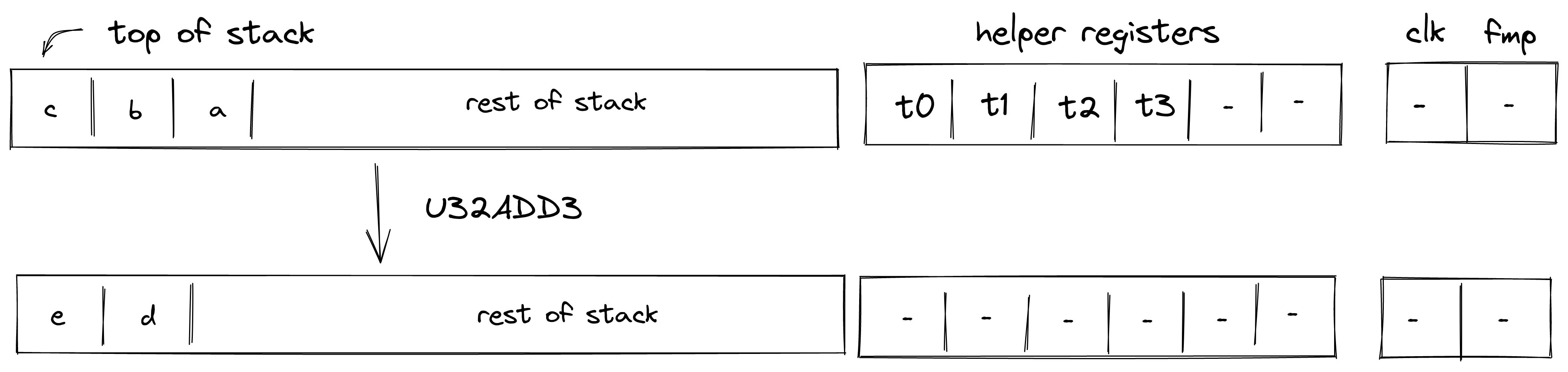

| u32overflowing_add3 - (1 cycle) | [c, b, a, ...] | [e, d, ...] | , Undefined if |

| u32wrapping_add3 - (2 cycles) | [c, b, a, ...] | [d, ...] | , Undefined if |

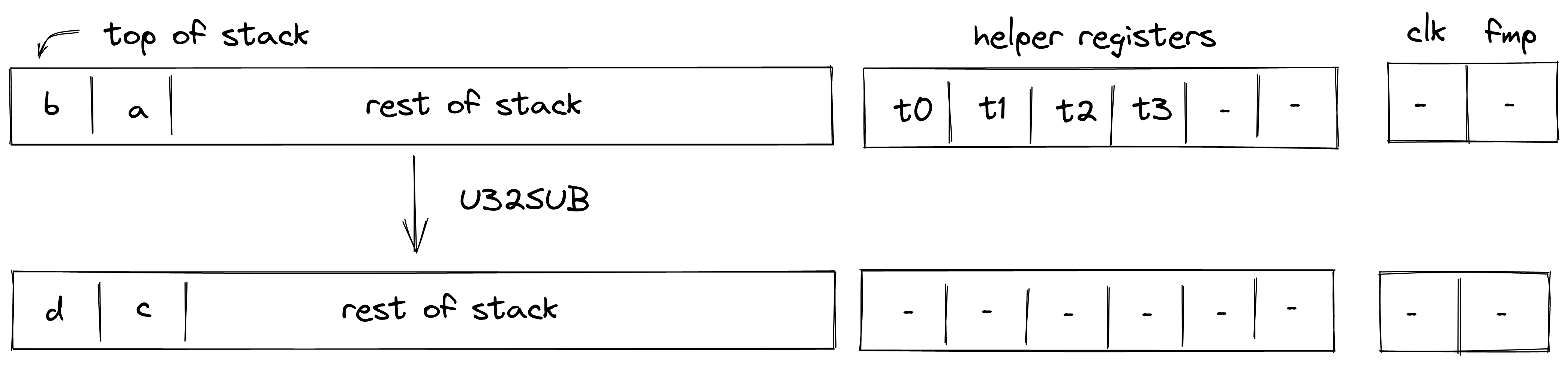

| u32overflowing_sub - (1 cycle) u32overflowing_sub.b - (2-3 cycles) | [b, a, ...] | [d, c, ...] | Undefined if |

| u32wrapping_sub - (2 cycles) u32wrapping_sub.b - (3-4 cycles) | [b, a, ...] | [c, ...] | Undefined if |

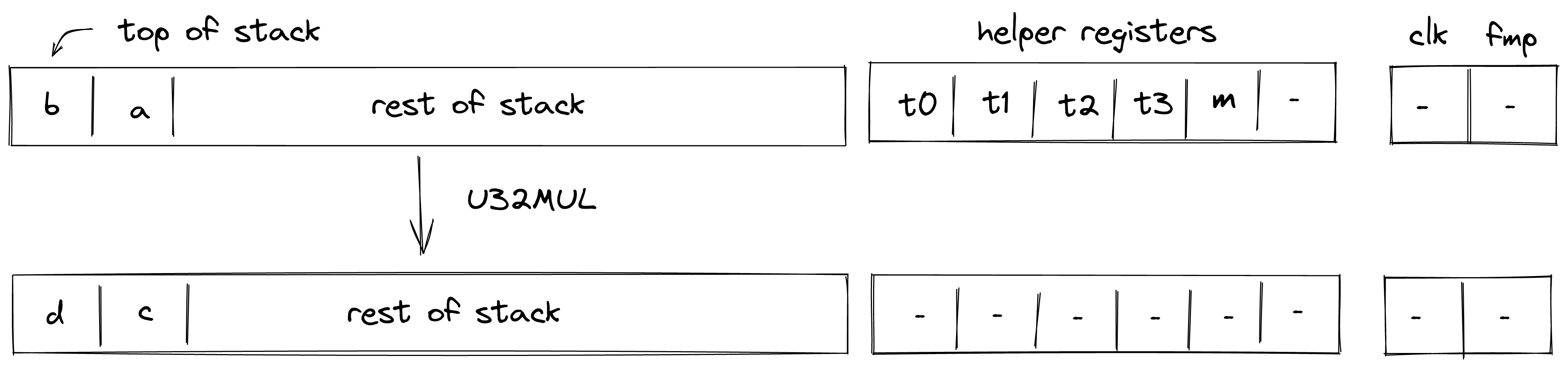

| u32overflowing_mul - (1 cycle) u32overflowing_mul.b - (2-3 cycles) | [b, a, ...] | [d, c, ...] | Undefined if |

| u32wrapping_mul - (2 cycles) u32wrapping_mul.b - (3-4 cycles) | [b, a, ...] | [c, ...] | Undefined if |

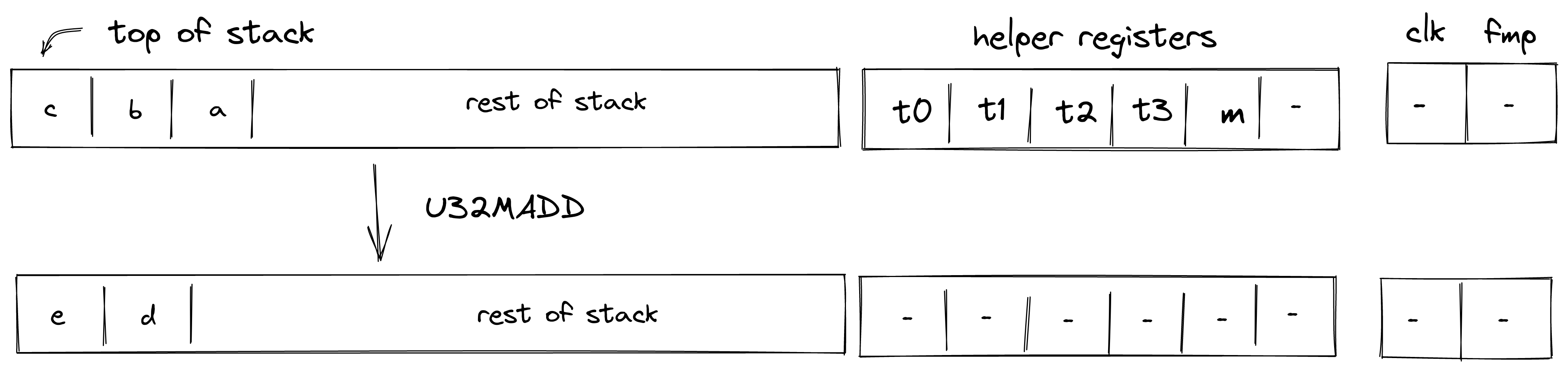

| u32overflowing_madd - (1 cycle) | [b, a, c, ...] | [e, d, ...] | Undefined if |

| u32wrapping_madd - (2 cycles) | [b, a, c, ...] | [d, ...] | Undefined if |

| u32div - (2 cycles) u32div.b - (3-4 cycles) | [b, a, ...] | [c, ...] | Fails if Undefined if |

| u32mod - (3 cycles) u32mod.b - (4-5 cycles) | [b, a, ...] | [c, ...] | Fails if Undefined if |

| u32divmod - (1 cycle) u32divmod.b - (2-3 cycles) | [b, a, ...] | [d, c, ...] | Fails if Undefined if |

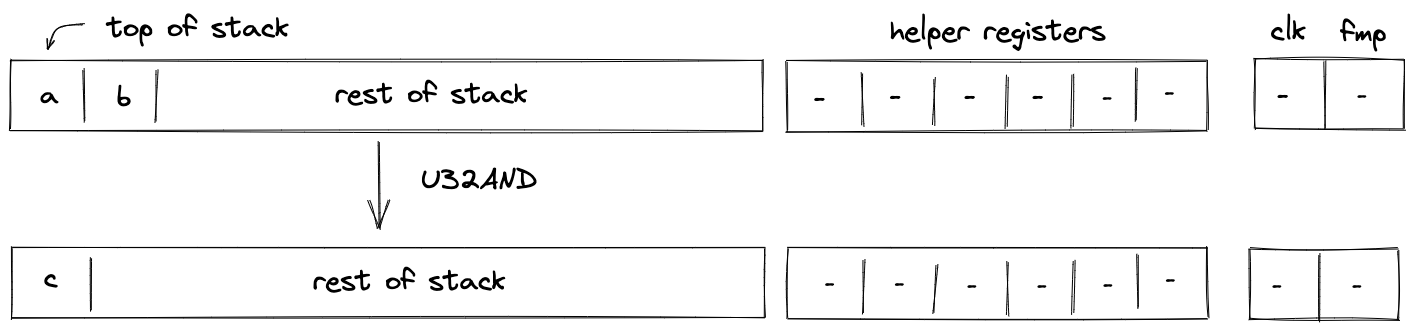

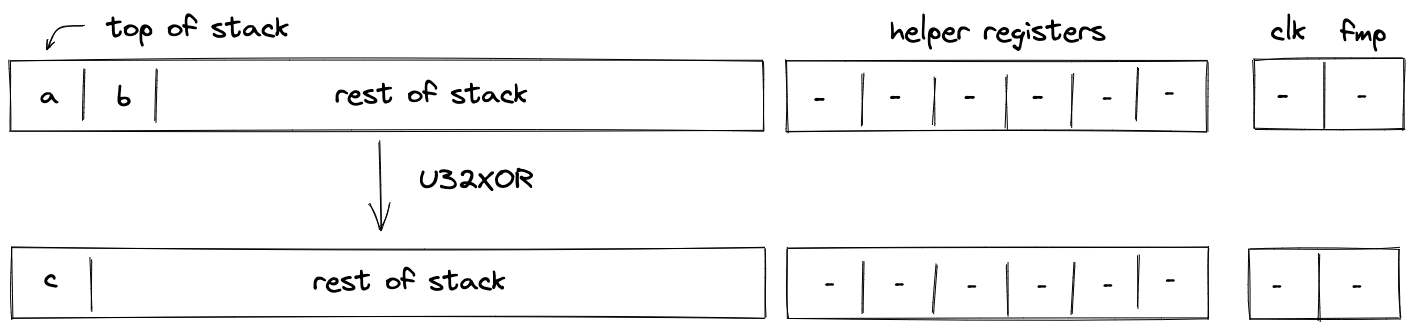

Bitwise operations

| Instruction | Stack_input | Stack_output | Notes |

|---|---|---|---|

| u32and - (1 cycle) u32and.b - (2 cycles) | [b, a, ...] | [c, ...] | Computes as a bitwise AND of binary representations of and . Fails if |

| u32or - (6 cycle)s u32or.b - (7 cycles) | [b, a, ...] | [c, ...] | Computes as a bitwise OR of binary representations of and . Fails if |

| u32xor - (1 cycle) u32xor.b - (2 cycles) | [b, a, ...] | [c, ...] | Computes as a bitwise XOR of binary representations of and . Fails if |

| u32not - (5 cycles) u32not.a - (6 cycles) | [a, ...] | [b, ...] | Computes as a bitwise NOT of binary representation of . Fails if |

| u32shl - (18 cycles) u32shl.b - (3 cycles) | [b, a, ...] | [c, ...] | Undefined if or |

| u32shr - (18 cycles) u32shr.b - (3 cycles) | [b, a, ...] | [c, ...] | Undefined if or |

| u32rotl - (18 cycles) u32rotl.b - (3 cycles) | [b, a, ...] | [c, ...] | Computes by rotating a 32-bit representation of to the left by bits. Undefined if or |

| u32rotr - (23 cycles) u32rotr.b - (3 cycles) | [b, a, ...] | [c, ...] | Computes by rotating a 32-bit representation of to the right by bits. Undefined if or |

| u32popcnt - (33 cycles) | [a, ...] | [b, ...] | Computes by counting the number of set bits in (hamming weight of ). Undefined if |

| u32clz - (42 cycles) | [a, ...] | [b, ...] | Computes as a number of leading zeros of . Undefined if |

| u32ctz - (34 cycles) | [a, ...] | [b, ...] | Computes as a number of trailing zeros of . Undefined if |

| u32clo - (41 cycles) | [a, ...] | [b, ...] | Computes as a number of leading ones of . Undefined if |

| u32cto - (33 cycles) | [a, ...] | [b, ...] | Computes as a number of trailing ones of . Undefined if |

Comparison operations

| Instruction | Stack_input | Stack_output | Notes |

|---|---|---|---|

| u32lt - (3 cycles) u32lt.b - (4 cycles) | [b, a, ...] | [c, ...] | Undefined if |

| u32lte - (5 cycles) u32lte.b - (6 cycles) | [b, a, ...] | [c, ...] | Undefined if |

| u32gt - (4 cycles) u32gt.b - (5 cycles) | [b, a, ...] | [c, ...] | Undefined if |

| u32gte - (4 cycles) u32gte.b - (5 cycles) | [b, a, ...] | [c, ...] | Undefined if |

| u32min - (8 cycles) u32min.b - (9 cycles) | [b, a, ...] | [c, ...] | Undefined if |

| u32max - (9 cycles) u32max.b - (10 cycles) | [b, a, ...] | [c, ...] | Undefined if |

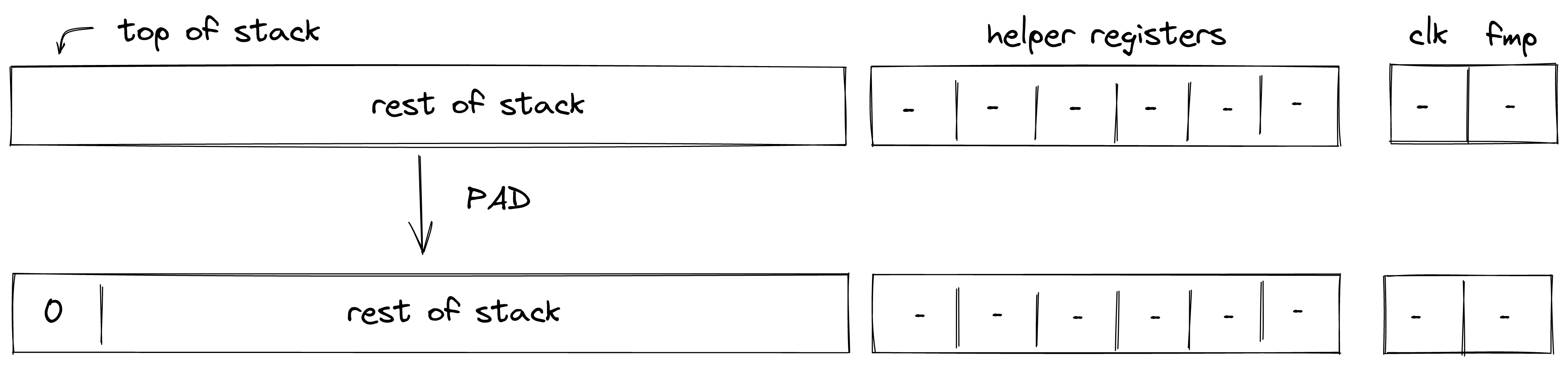

Stack manipulation

Miden VM stack is a push-down stack of field elements. The stack has a maximum depth of , but only the top elements are directly accessible via the instructions listed below.

In addition to the typical stack manipulation instructions such as drop, dup, swap etc., Miden assembly provides several conditional instructions which can be used to manipulate the stack based on some condition - e.g., conditional swap cswap or conditional drop cdrop.

| Instruction | Stack_input | Stack_output | Notes |

|---|---|---|---|

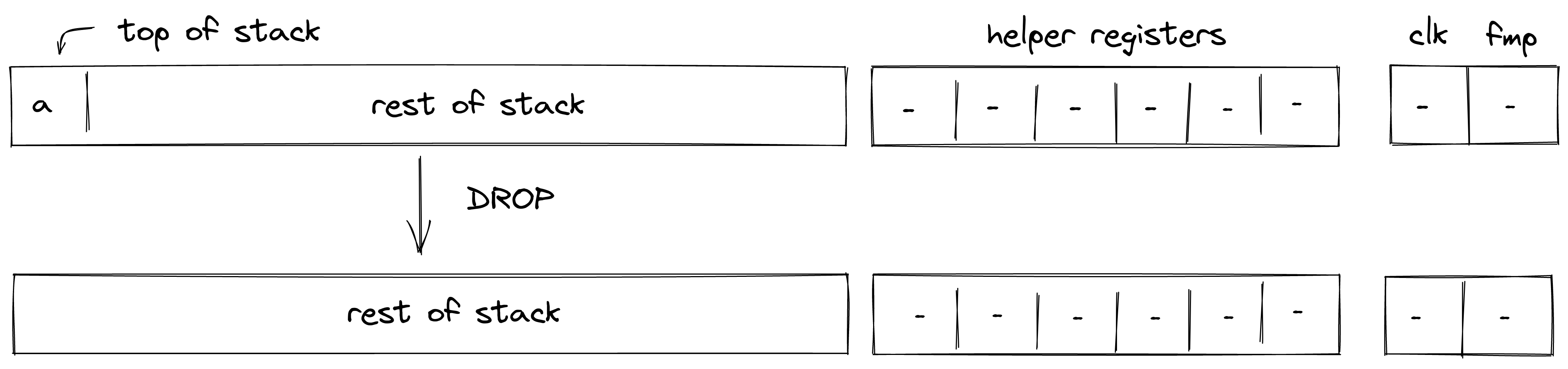

| drop - (1 cycle) | [a, ... ] | [ ... ] | Deletes the top stack item. |

| dropw - (4 cycles) | [A, ... ] | [ ... ] | Deletes a word (4 elements) from the top of the stack. |

| padw - (4 cycles) | [ ... ] | [0, 0, 0, 0, ... ] | Pushes four values onto the stack. Note: simple pad is not provided because push.0 does the same thing. |

| dup.n - (1-3 cycles) | [ ..., a, ... ] | [a, ..., a, ... ] | Pushes a copy of the th stack item onto the stack. dup and dup.0 are the same instruction. Valid for |

| dupw.n - (4 cycles) | [ ..., A, ... ] | [A, ..., A, ... ] | Pushes a copy of the th stack word onto the stack. dupw and dupw.0 are the same instruction. Valid for |

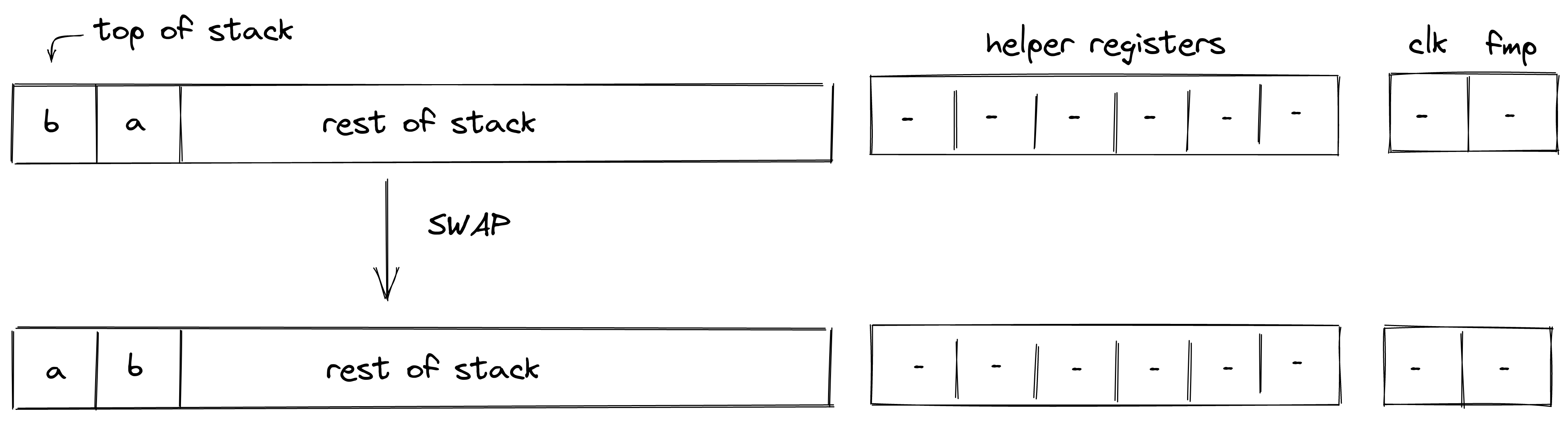

| swap.n - (1-6 cycles) | [a, ..., b, ... ] | [b, ..., a, ... ] | Swaps the top stack item with the th stack item. swap and swap.1 are the same instruction. Valid for |

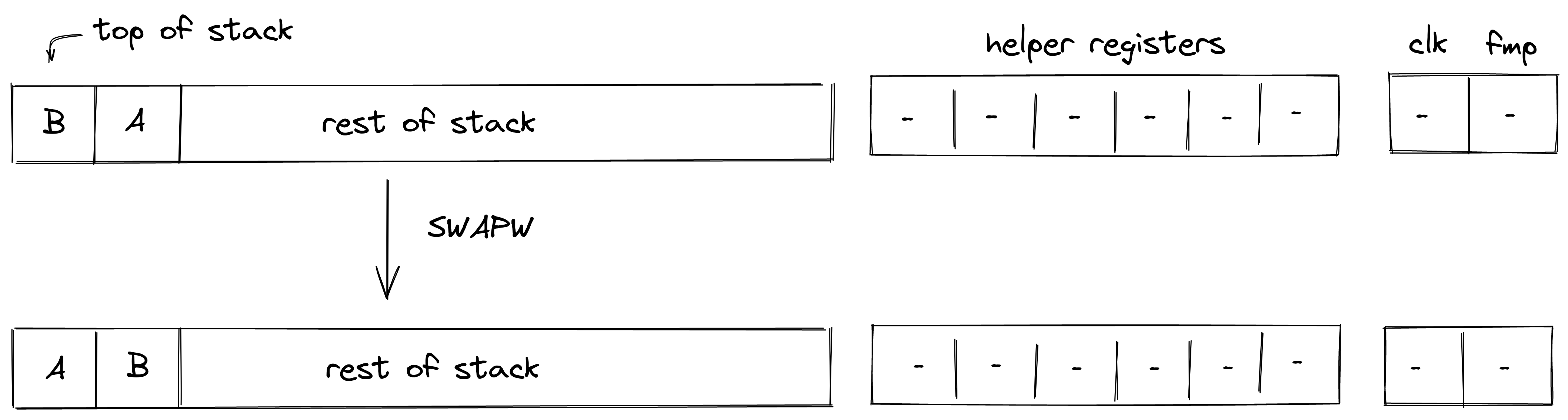

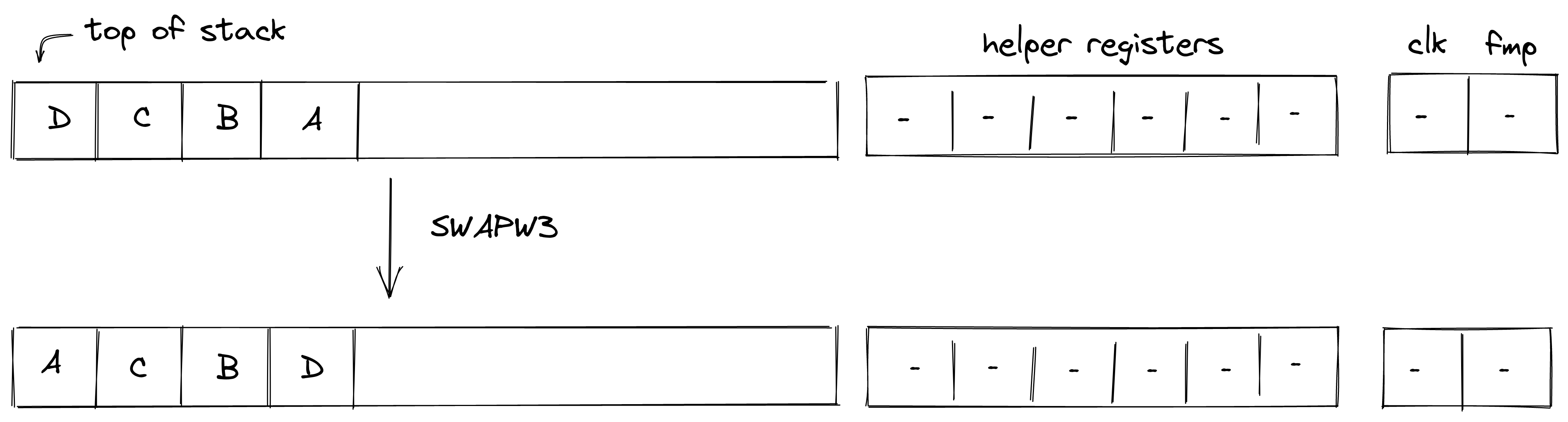

| swapw.n - (1 cycle) | [A, ..., B, ... ] | [B, ..., A, ... ] | Swaps the top stack word with the th stack word. swapw and swapw.1 are the same instruction. Valid for |

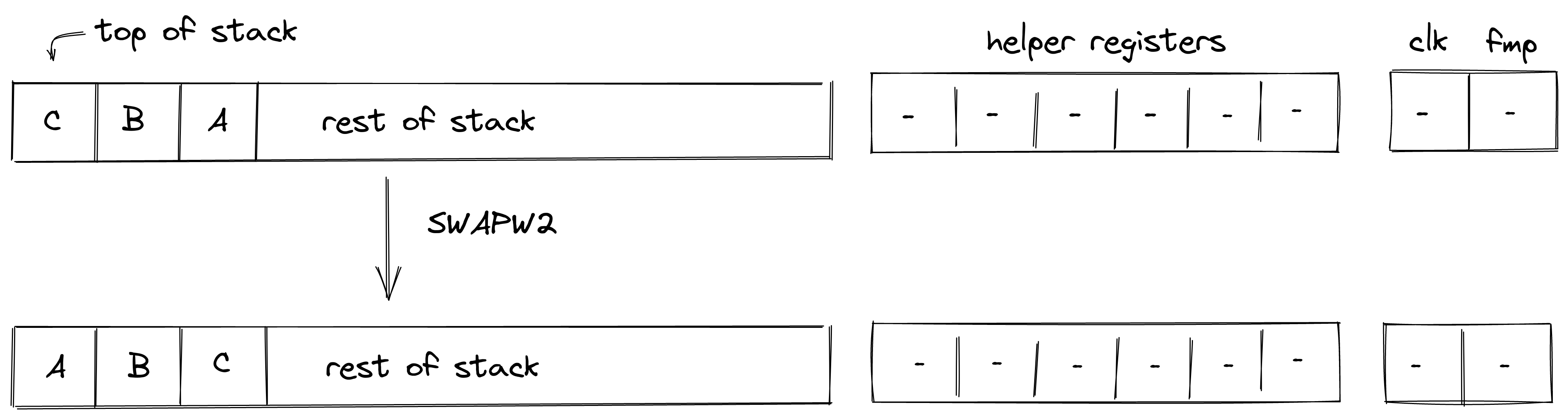

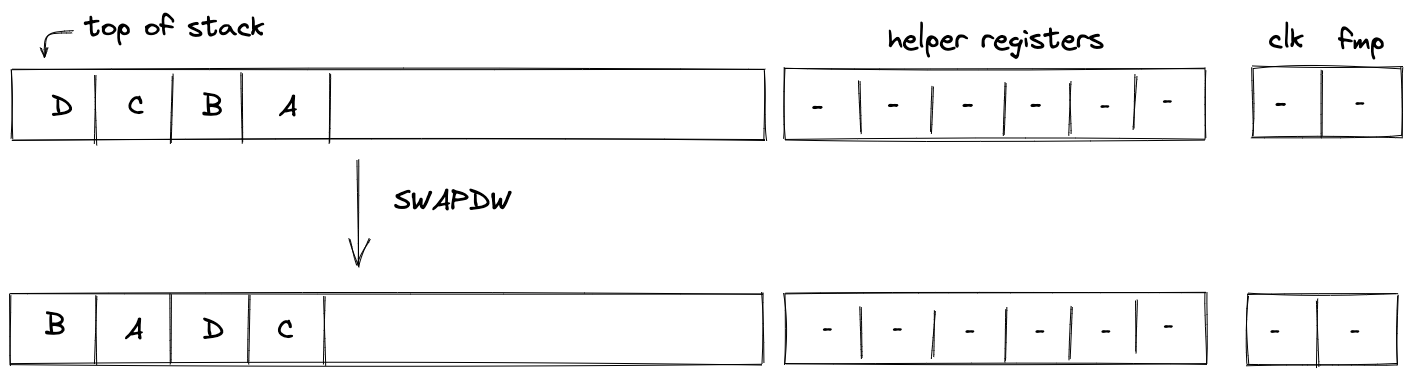

| swapdw - (1 cycle) | [D, C, B, A, ... ] | [B, A, D, C ... ] | Swaps words on the top of the stack. The 1st with the 3rd, and the 2nd with the 4th. |

| movup.n - (1-4 cycles) | [ ..., a, ... ] | [a, ... ] | Moves the th stack item to the top of the stack. Valid for |

| movupw.n - (2-3 cycles) | [ ..., A, ... ] | [A, ... ] | Moves the th stack word to the top of the stack. Valid for |

| movdn.n - (1-4 cycles) | [a, ... ] | [ ..., a, ... ] | Moves the top stack item to the th position of the stack. Valid for |

| movdnw.n - (2-3 cycles) | [A, ... ] | [ ..., A, ... ] | Moves the top stack word to the th word position of the stack. Valid for |

Conditional manipulation

| Instruction | Stack_input | Stack_output | Notes |

|---|---|---|---|

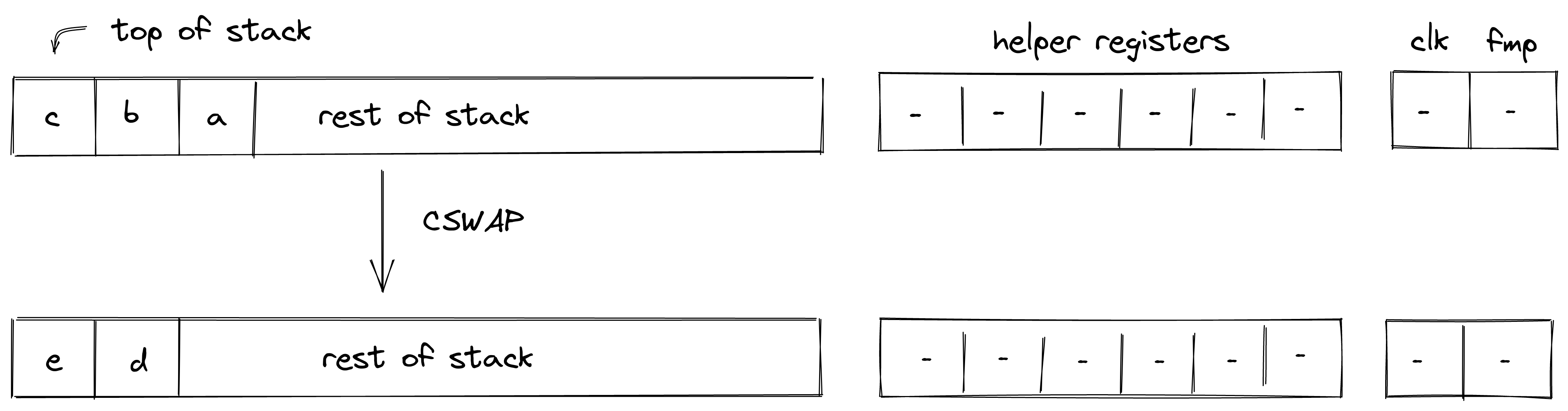

| cswap - (1 cycle) | [c, b, a, ... ] | [e, d, ... ] | Fails if |

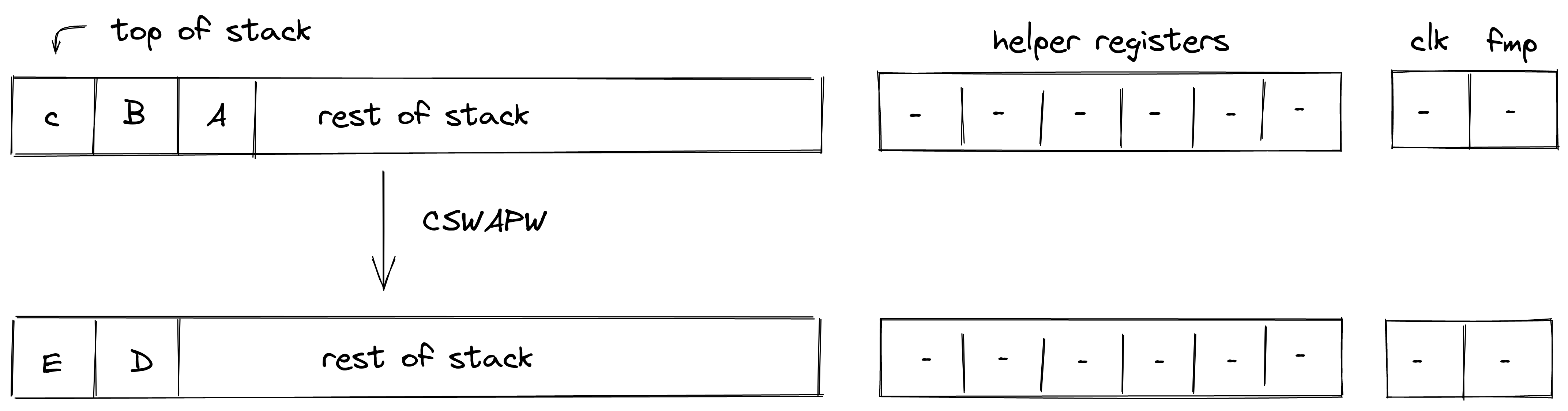

| cswapw - (1 cycle) | [c, B, A, ... ] | [E, D, ... ] | Fails if |

| cdrop - (2 cycles) | [c, b, a, ... ] | [d, ... ] | Fails if |

| cdropw - (5 cycles) | [c, B, A, ... ] | [D, ... ] | Fails if |

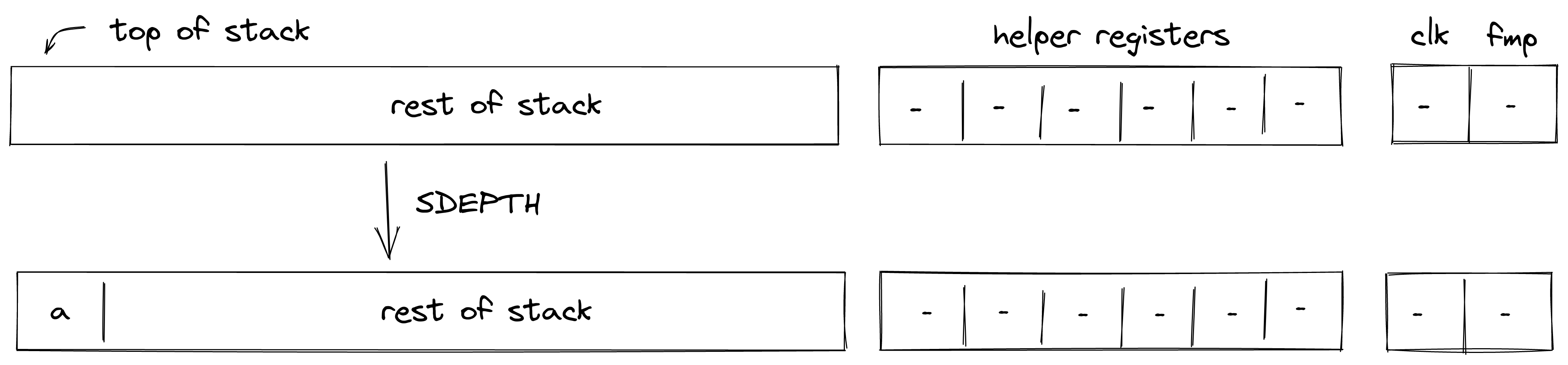

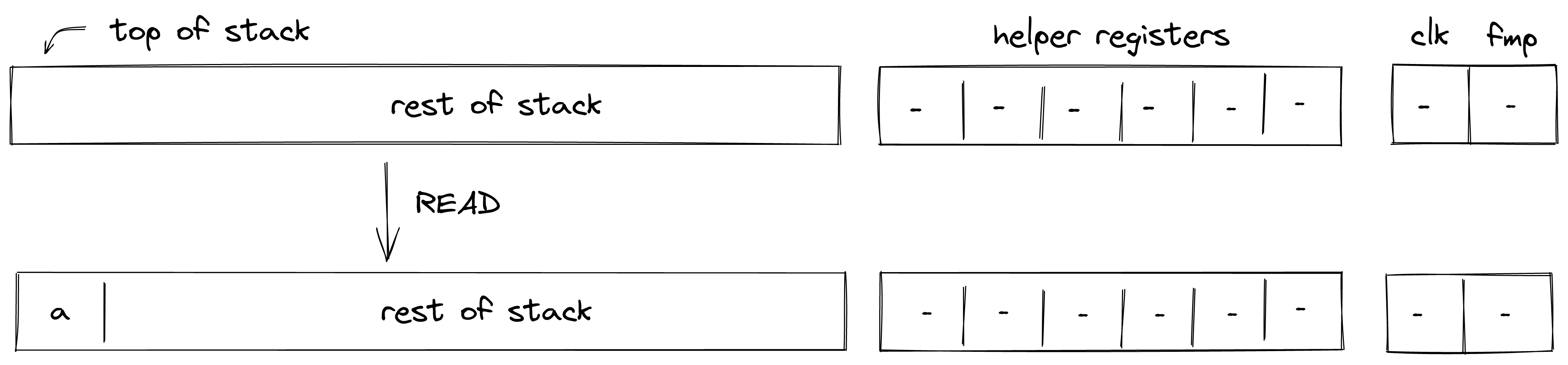

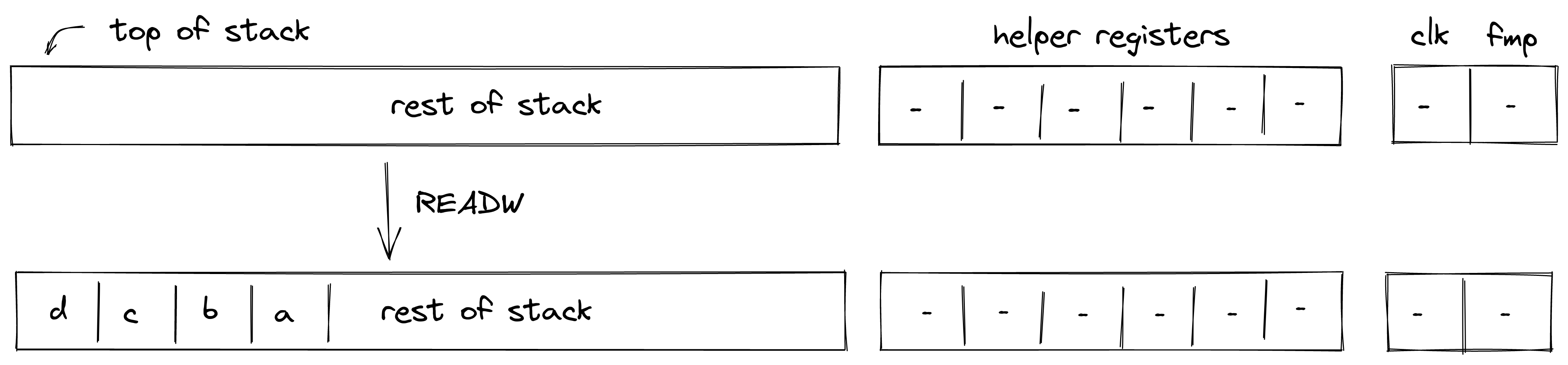

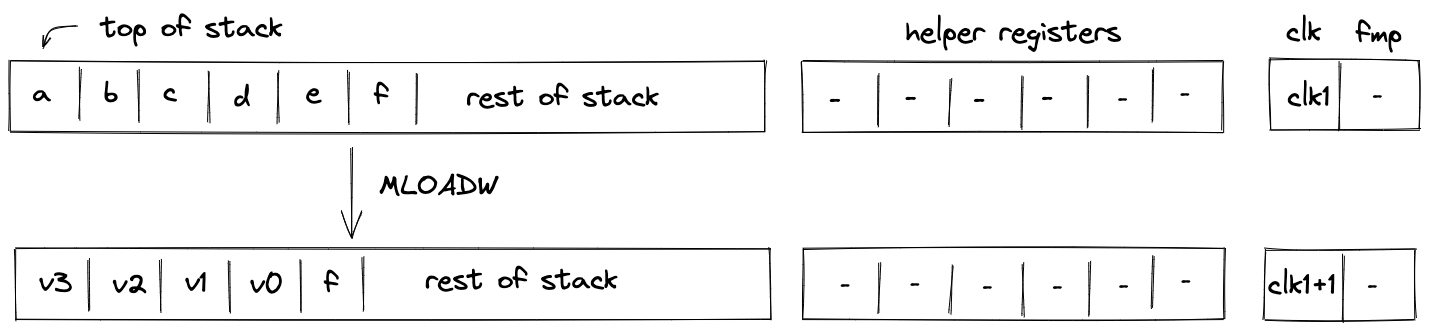

Input / output operations

Miden assembly provides a set of instructions for moving data between the operand stack and several other sources. These sources include:

- Program code: values to be moved onto the operand stack can be hard-coded in a program's source code.

- Environment: values can be moved onto the operand stack from environment variables. These include current clock cycle, current stack depth, and a few others.

- Advice provider: values can be moved onto the operand stack from the advice provider by popping them from the advice stack (see more about the advice provider here). The VM can also inject new data into the advice provider via system event instructions.

- Memory: values can be moved between the stack and random-access memory. The memory is element-addressable, meaning that a single element is located at each address. However, reading and writing elements to/from memory in batches of four is supported via the appropriate instructions (e.g.

mem_loadwormem_storew). Memory can be accessed via absolute memory references (i.e., via memory addresses) as well as via local procedure references (i.e., local index). The latter approach ensures that a procedure does not access locals of another procedure.

Constant inputs

| Instruction | Stack_input | Stack_output | Notes |

|---|---|---|---|

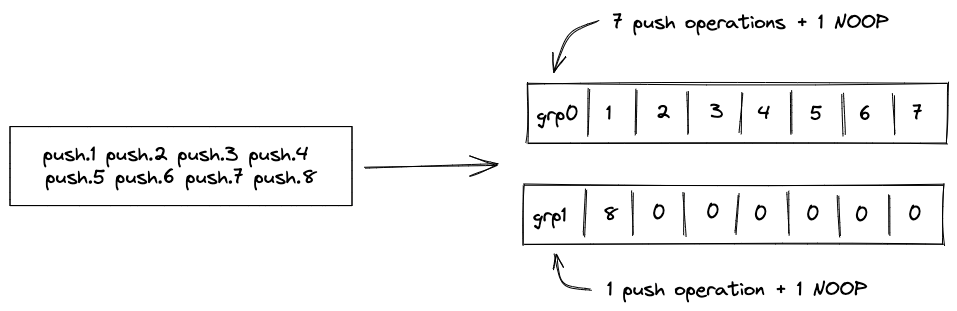

| push.a - (1-2 cycles) push.a.b push.a.b.c... | [ ... ] | [a, ... ] [b, a, ... ] [c, b, a, ... ] | Pushes values , , etc. onto the stack. Up to values can be specified. All values must be valid field elements in decimal (e.g., ) or hexadecimal (e.g., ) representation. |

The value can be specified in hexadecimal form without periods between individual values as long as it describes a full word ( field elements or bytes). Note that hexadecimal values separated by periods (short hexadecimal strings) are assumed to be in big-endian order, while the strings specifying whole words (long hexadecimal strings) are assumed to be in little-endian order. That is, the following are semantically equivalent:

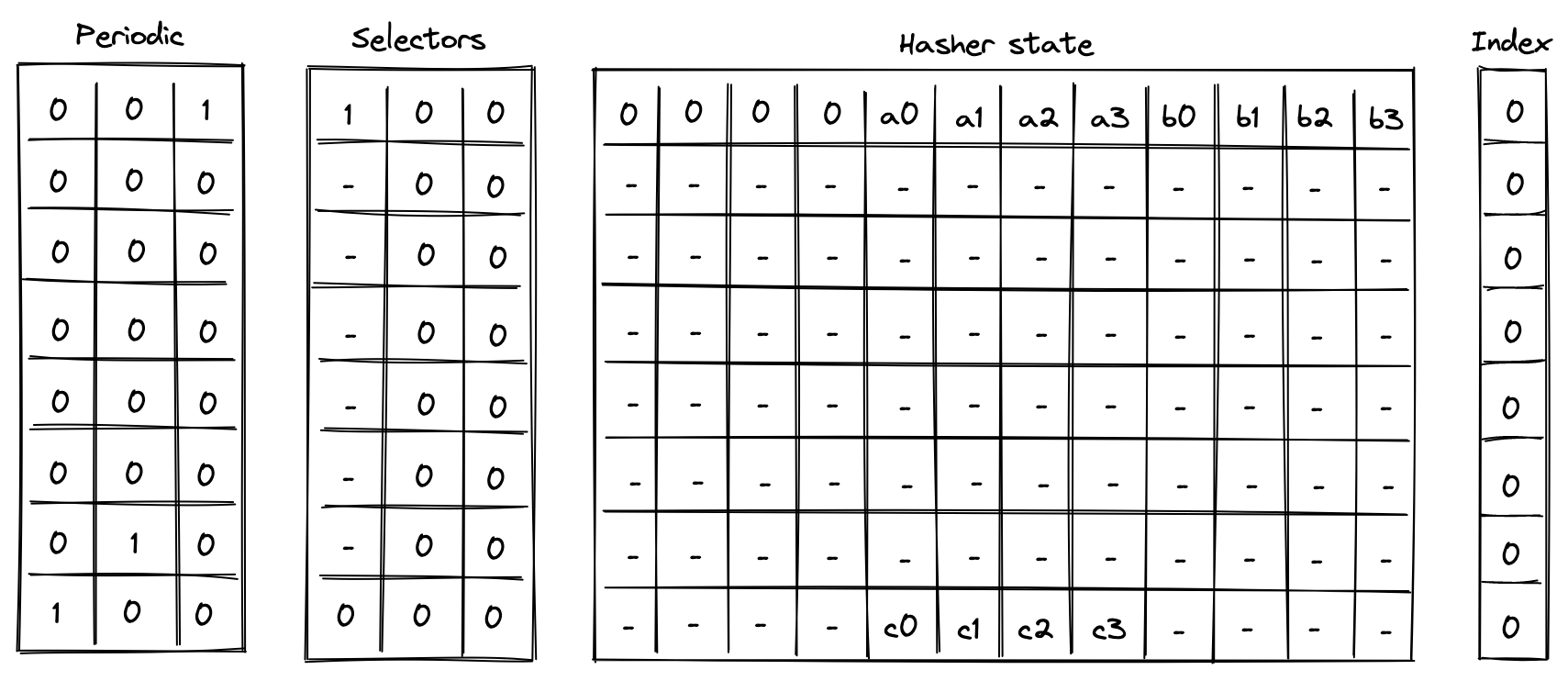

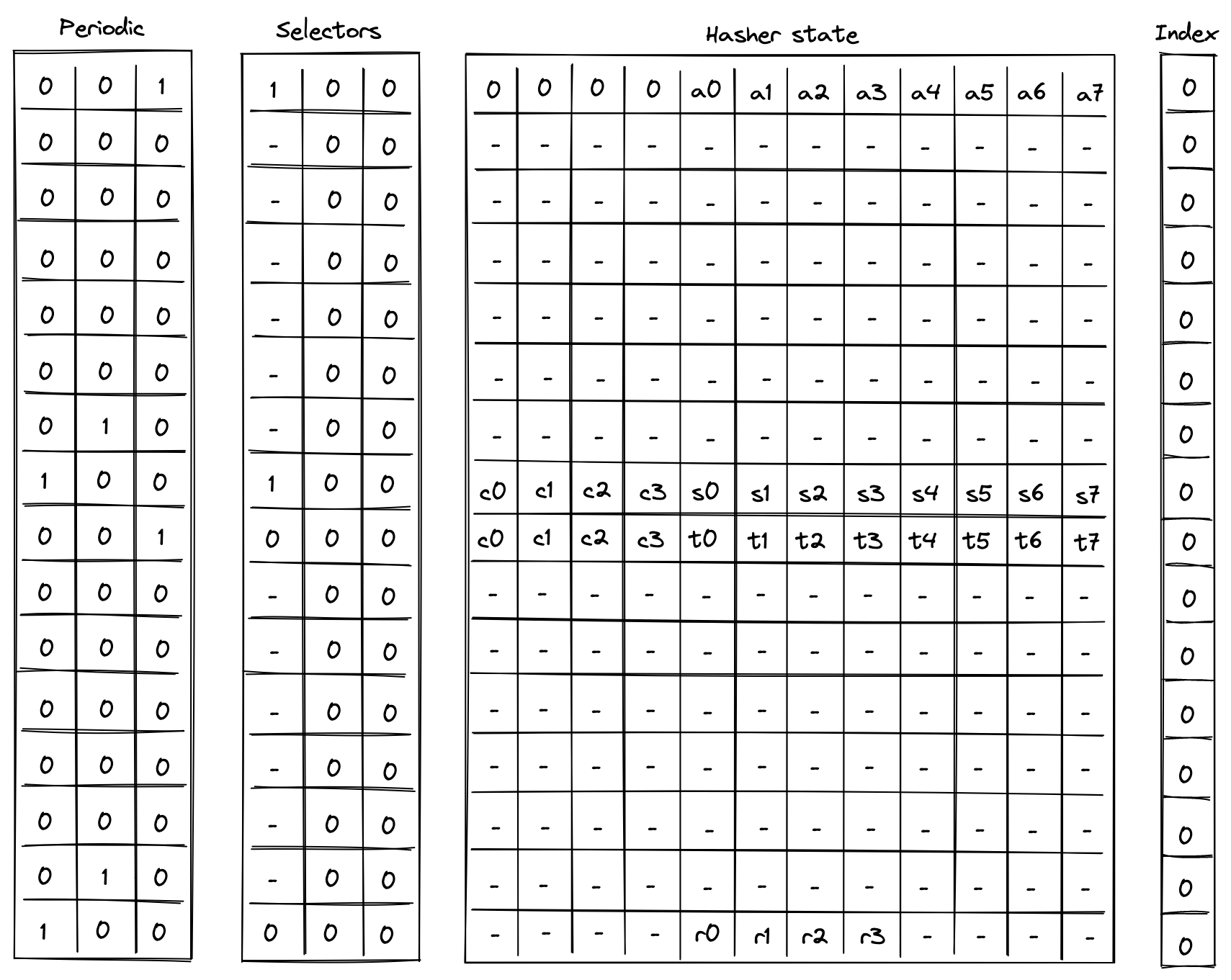

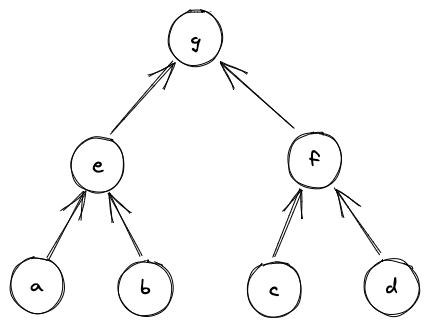

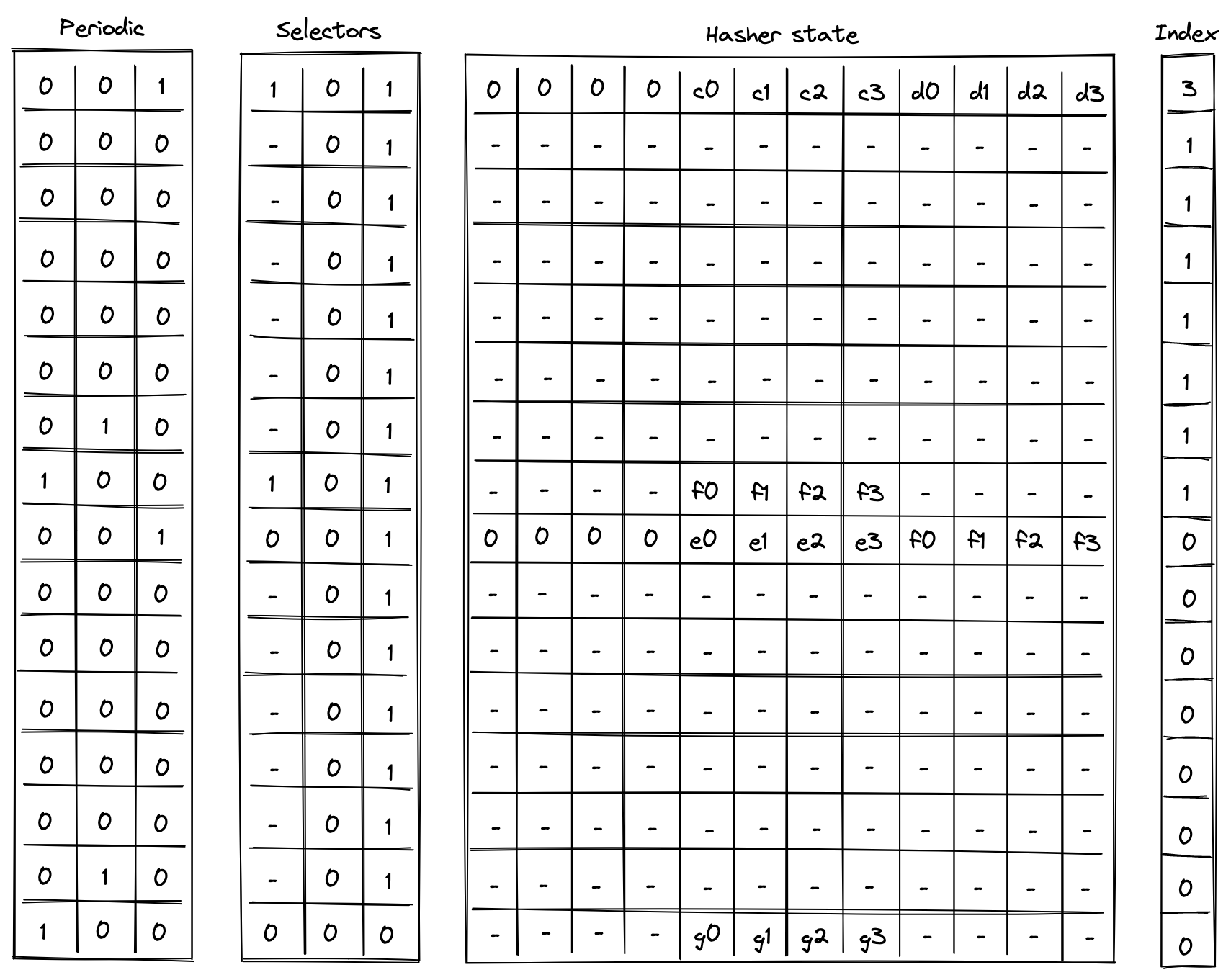

push.0x00001234.0x00005678.0x00009012.0x0000abcd